| Commit message (Collapse) | Author | Age | Files | Lines |

|---|

| |

|

|

|

|

| |

In Jaeger:

- Before: huge list of uncategorized database calls

- After: nice and collapsible into units of work

|

| |

|

|

| |

#13404 removed an import of `Optional` which was still needed

because #13413 added more usages.

|

| |

|

|

|

|

|

|

| |

Previously, `_resolve_state_at_missing_prevs` returned the resolved

state before an event and a partial state flag. These were unwieldy to

carry around and would only ever be used to build an event context. Build

the event context directly instead.

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

|

|

|

| |

Make sure that we re-check the auth rules during state resync, otherwise

rejected events get un-rejected.

|

| |

|

|

|

| |

Make sure that we only pull out events from the db once they have no

prev-events with partial state.

|

| |

|

|

|

|

|

|

|

| |

(#13355)

Avoid blocking on full state in `_resolve_state_at_missing_prevs` and

return a new flag indicating whether the resolved state is partial.

Thread that flag around so that it makes it into the event context.

Co-authored-by: Richard van der Hoff <1389908+richvdh@users.noreply.github.com>

|

| |

|

|

|

|

|

|

|

| |

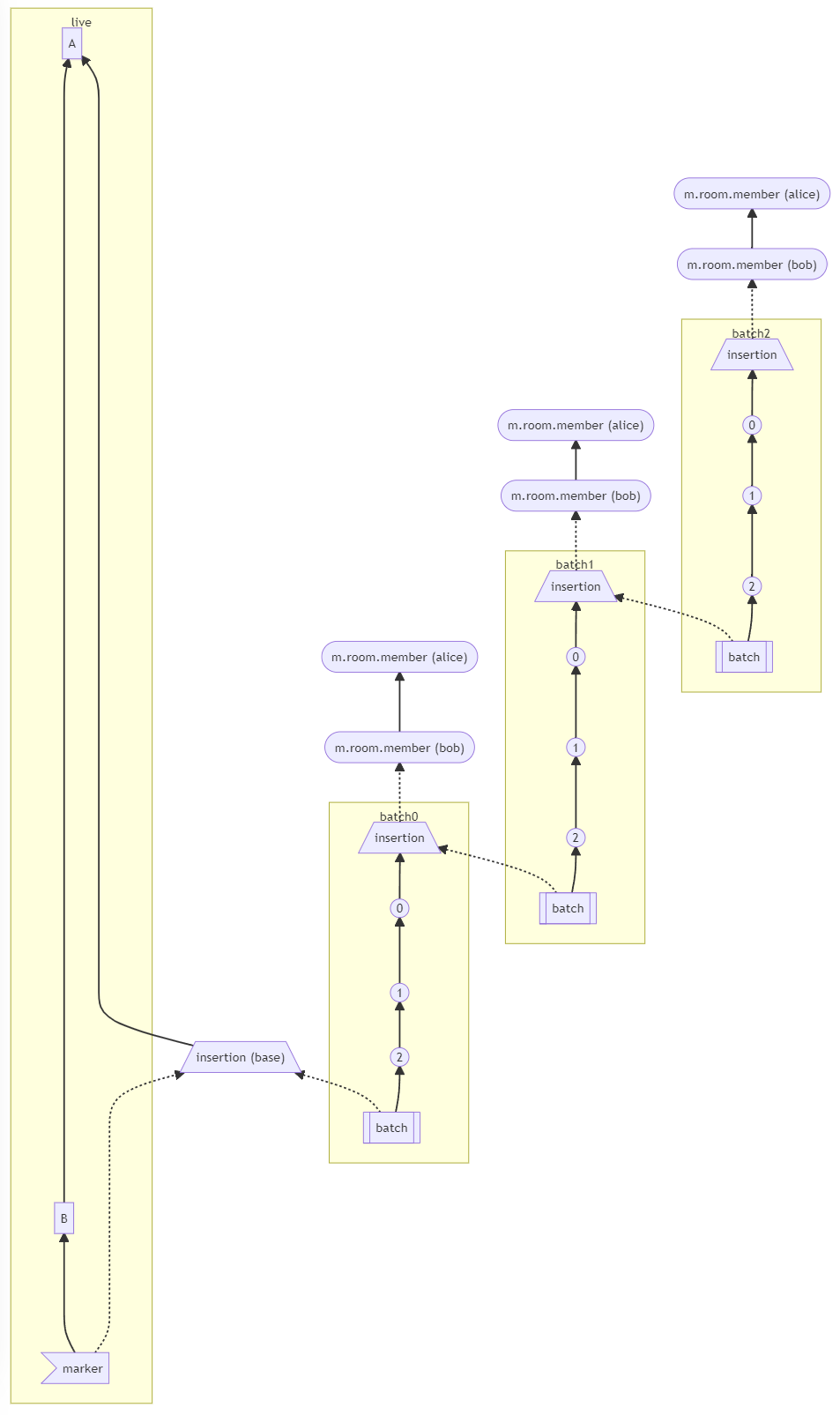

(#13205)

Depends on https://github.com/matrix-org/synapse/pull/13320

Complement tests: https://github.com/matrix-org/complement/pull/406

We could use the same method to backfill for `/context` as well in the future, see https://github.com/matrix-org/synapse/issues/3848

|

| |

|

|

|

|

|

|

|

|

|

|

| |

When a room has the partial state flag, we may not have an accurate

`m.room.member` event for event senders in the room's current state, and

so cannot perform soft fail checks correctly. Skip the soft fail check

entirely in this case.

As an alternative, we could block until we have full state, but that

would prevent us from receiving incoming events over federation, which

is undesirable.

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

|

|

|

|

|

|

|

|

|

|

| |

Update `get_pdu` to return the untouched, pristine `EventBase` as it was originally seen over federation (no metadata added). Previously, we returned the same `event` reference that we stored in the cache. Downstream code modified that object in place, adding metadata such as the `outlier` flag, which essentially poisoned our cache. Now we always return a copy of the `event`, so the original stays pristine in our cache and can be re-used for the next cache call.

Split out from https://github.com/matrix-org/synapse/pull/13205

As discussed at:

- https://github.com/matrix-org/synapse/pull/13205#discussion_r918365746

- https://github.com/matrix-org/synapse/pull/13205#discussion_r918366125

Related to https://github.com/matrix-org/synapse/issues/12584. This PR doesn't fix that issue because it hits [`get_event` which exists from the local database before it tries to `get_pdu`](https://github.com/matrix-org/synapse/blob/7864f33e286dec22368dc0b11c06eebb1462a51e/synapse/federation/federation_client.py#L581-L594).
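A minimal sketch of the copy-on-read idea behind the `get_pdu` change described above. `FakeEvent` and `PduCache` are hypothetical stand-ins invented for illustration; they are not Synapse's `EventBase` or its real cache.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class FakeEvent:
    """Hypothetical stand-in for an event; not Synapse's EventBase."""

    event_id: str
    metadata: Dict[str, bool] = field(default_factory=dict)


class PduCache:
    """Toy cache that hands out copies so callers cannot mutate the stored entry."""

    def __init__(self) -> None:
        self._cache: Dict[str, FakeEvent] = {}

    def put(self, event: FakeEvent) -> None:
        self._cache[event.event_id] = copy.deepcopy(event)

    def get(self, event_id: str) -> Optional[FakeEvent]:
        cached = self._cache.get(event_id)
        # Return a copy: the pristine original stays in the cache.
        return copy.deepcopy(cached) if cached is not None else None


cache = PduCache()
cache.put(FakeEvent("$abc"))

first = cache.get("$abc")
assert first is not None
first.metadata["outlier"] = True  # downstream code mutates only its own copy

second = cache.get("$abc")
assert second is not None
```

Here `second.metadata` is still empty even though `first` was flagged, which is the property the change is after. If `get` returned the stored object itself, the flag would leak into every later lookup.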

|

| | |

|

| | |

|

| |

|

|

|

|

|

| |

There is a corner in `_check_event_auth` (long known as "the weird corner") where, if we get an event with auth_events which don't match those we were expecting, we attempt to resolve the difference between our state and the remote's with a state resolution.

This isn't specced, and there's general agreement we shouldn't be doing it.

However, it turns out that the faster-joins code was relying on it, so we need to introduce something similar (but rather simpler) for that.

|

| |

|


| |

Whenever we want to persist an event, we first compute an event context,

which includes the state at the event and a flag indicating whether the

state is partial. After a lot of processing, we finally try to store the

event in the database, which can fail for partial state events when the

containing room has been un-partial stated in the meantime.

We detect the race as a foreign key constraint failure in the data store

layer and turn it into a special `PartialStateConflictError` exception,

which makes its way up to the method in which we computed the event

context.

To make things difficult, the exception needs to cross a replication

request: `/fed_send_events` for events coming over federation and

`/send_event` for events from clients. We transport the

`PartialStateConflictError` as a `409 Conflict` over replication and

turn `409`s back into `PartialStateConflictError`s on the worker making

the request.

All client events go through

`EventCreationHandler.handle_new_client_event`, which is called in

*a lot* of places. Instead of trying to update all the code which

creates client events, we turn the `PartialStateConflictError` into a

`429 Too Many Requests` in

`EventCreationHandler.handle_new_client_event` and hope that clients

take it as a hint to retry their request.

On the federation event side, there are 7 places which compute event

contexts. 4 of them use outlier event contexts:

`FederationEventHandler._auth_and_persist_outliers_inner`,

`FederationHandler.do_knock`, `FederationHandler.on_invite_request` and

`FederationHandler.do_remotely_reject_invite`. These events won't have

the partial state flag, so we do not need to do anything for them.

The remaining 3 paths which create events are

`FederationEventHandler.process_remote_join`,

`FederationEventHandler.on_send_membership_event` and

`FederationEventHandler._process_received_pdu`.

We can't experience the race in `process_remote_join`, unless we're

handling an additional join into a partial state room, which currently

blocks, so we make no attempt to handle it correctly.

`on_send_membership_event` is only called by

`FederationServer._on_send_membership_event`, so we catch the

`PartialStateConflictError` there and retry just once.

`_process_received_pdu` is called by `on_receive_pdu` for incoming

events and `_process_pulled_event` for backfill. The latter should never

try to persist partial state events, so we ignore it. We catch the

`PartialStateConflictError` in `on_receive_pdu` and retry just once.

Referring to the graph of code paths in

https://github.com/matrix-org/synapse/issues/12988#issuecomment-1156857648

may make the above make more sense.
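The "catch and retry just once" handling described above can be sketched roughly as follows. This uses `asyncio` purely for illustration, and `run_with_one_retry` and `compute_context_and_persist` are hypothetical names; only the exception name comes from the commit message.

```python
import asyncio
from typing import Awaitable, Callable, List


class PartialStateConflictError(Exception):
    """Local stand-in for the exception named in the commit message."""


async def run_with_one_retry(attempt: Callable[[], Awaitable[str]]) -> str:
    try:
        return await attempt()
    except PartialStateConflictError:
        # The room was un-partial-stated while we were working: recompute
        # the event context and persist again. A second failure propagates.
        return await attempt()


calls: List[int] = []


async def compute_context_and_persist() -> str:
    """Hypothetical unit of work that loses the race on its first call only."""
    calls.append(1)
    if len(calls) == 1:
        raise PartialStateConflictError()
    return "persisted"


result = asyncio.run(run_with_one_retry(compute_context_and_persist))
```

The important detail is that the whole unit of work, including computing the event context, is repeated, since the stale context is what caused the conflict in the first place.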

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

|

|

| |

`_check_event_auth` is expected to raise `AuthError`s, so no need to log it

again.

|

| |

|

|

|

|

|

|

|

| |

When we fail to persist a federation event, we kick off a task to remove

its push actions in the background, using the current logging context.

Since we don't `await` that task, we may finish our logging context

before the task finishes. There's no reason to not `await` the task, so

let's do that.

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

| |

* Add auth events to events used in tests

* Move some event auth checks out to a different method

Some of the event auth checks apply to an event's auth_events, rather than the

state at the event - which means they can play no part in state

resolution. Move them out to a separate method.

* Rename check_auth_rules_for_event

Now it only checks the state-dependent auth rules, it needs a better name.

|

| |\ |

|

| | |

| |

| |

| |

| |

| |

| | |

Instead, use the `room_version` property of the event we're checking.

The `room_version` was originally added as a parameter somewhere around #4482,

but really it's been redundant since #6875 added a `room_version` field to `EventBase`.

|

| | |

| |

| |

| |

| |

| |

| | |

Instead, use the `room_version` property of the event we're validating.

The `room_version` was originally added as a parameter somewhere around #4482,

but really it's been redundant since #6875 added a `room_version` field to `EventBase`.

|

| |/

|

|

| |

... to help us keep track of these things

|

| | |

|

| |

|

|

|

|

|

| |

partial-state room (#12812)

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| | |

|

| | |

|

| | |

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

Sending marker events as state now so they are always able to be seen by homeservers (not lost in some timeline gap).

Part of [MSC2716](https://github.com/matrix-org/matrix-spec-proposals/pull/2716)

Complement tests: https://github.com/matrix-org/complement/pull/371

As initially discussed at https://github.com/matrix-org/matrix-spec-proposals/pull/2716#discussion_r782629097 and https://github.com/matrix-org/matrix-spec-proposals/pull/2716#discussion_r876684431

When someone joins a room, process all of the marker events we see in the current state. Marker events should be sent with a unique `state_key` so that they can all resolve in the current state and easily be discovered. Marker events as state:

- If we re-use the same `state_key` (like `""`), then we would have to fetch previous snapshots of state up through time to find all of the marker events. This way we can avoid all of that. This PR originally did this, but a smarter approach came out of an [out of band discussion with @erikjohnston](https://docs.google.com/document/d/1JJDuPfcPNX75fprdTWlxlaKjWOdbdJylbpZ03hzo638/edit#bookmark=id.sm92fqyq7vpp).

- Also avoids state resolution conflicts where only one of the marker events wins

As a homeserver, when we see new marker state, we know there is new history imported somewhere back in time and should process it to fetch the insertion event where the historical messages are and set it as an insertion extremity. This way we know where to backfill more messages when someone asks for scrollback.

|

| |

|

|

| |

accept state filters and update calls where possible (#12791)

|

| |

|

|

| |

It simply passes through to `BulkPushRuleEvaluator`, which can be

called directly instead.

|

| |

|

|

|

|

|

|

|

|

| |

Refactor how the `EventContext` class works, with the intention of reducing the amount of state we fetch from the DB during event processing.

The idea here is to get rid of the cached `current_state_ids` and `prev_state_ids` that live in the `EventContext`, and instead defer straight to the database (and its caching).

One change that may have a noticeable effect is that we now no longer prefill the `get_current_state_ids` cache on a state change. However, that query is relatively light, since it's just a case of reading a table from the DB (unlike fetching state at an event, which is more heavyweight). For deployments with workers this cache isn't even used.

Part of #12684

|

| | |

|

| |

|

|

|

|

| |

When we join a room via the faster-joins mechanism, we end up with "partial

state" at some points on the event DAG. Many parts of the codebase need to

wait for the full state to load. So, we implement a mechanism to keep track of

which events have partial state, and wait for them to be fully-populated.

|

| |

|

|

|

| |

We work through all the events with partial state, updating the state at each

of them. Once it's done, we recalculate the state for the whole room, and then

mark the room as having complete state.

|

| |

|

|

|

|

|

|

|

|

|

| |

Refactor and convert `Linearizer` to async. This makes a `Linearizer`

cancellation bug easier to fix.

Also refactor to use an async context manager, which eliminates an

unlikely footgun where code that doesn't immediately use the context

manager could forget to release the lock.
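As an illustration of why an async context manager removes the "forgot to release" footgun, here is a toy per-key lock. It is a sketch built on `asyncio`, not Synapse's `Linearizer`, and it does not attempt to model the cancellation behaviour mentioned above; `KeyedLock`, `queue`, and `worker` are names chosen for this example.

```python
import asyncio
from collections import defaultdict
from contextlib import asynccontextmanager
from typing import AsyncIterator, DefaultDict, List


class KeyedLock:
    """Toy per-key lock; callers for the same key run one at a time."""

    def __init__(self) -> None:
        self._locks: DefaultDict[str, asyncio.Lock] = defaultdict(asyncio.Lock)

    @asynccontextmanager
    async def queue(self, key: str) -> AsyncIterator[None]:
        # The lock is acquired on entry and always released on exit,
        # even if the body raises.
        async with self._locks[key]:
            yield


async def worker(lock: KeyedLock, name: str, log: List[str]) -> None:
    async with lock.queue("room"):
        log.append(f"{name} start")
        await asyncio.sleep(0)  # yield to the event loop while holding the lock
        log.append(f"{name} end")


async def main() -> List[str]:
    lock = KeyedLock()
    log: List[str] = []
    await asyncio.gather(worker(lock, "a", log), worker(lock, "b", log))
    return log


log = asyncio.run(main())
```

Because acquisition only happens inside `async with`, there is no separate object a caller can hold on to and forget to release.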

Signed-off-by: Sean Quah <seanq@element.io>

|

| |

|

| |

If we're missing most of the events in the room state, then we may as well call the /state endpoint, instead of individually requesting each and every event.

|

| |

|

|

| |

Do not attempt to send remote joins out over federation. Normally, it will do

nothing; occasionally, it will do the wrong thing.

|

| |

|

|

|

|

|

|

|

|

|

|

| |

When we get a partial_state response from send_join, store information in the

database about it:

* store a record about the room as a whole having partial state, and stash the

list of member servers too.

* flag the join event itself as having partial state

* also, for any new events whose prev-events are partial-stated, note that

they will *also* be partial-stated.

We don't yet make any attempt to interpret this data, so API calls (and a bunch

of other things) are just going to get incorrect data.
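The propagation rule in the list above (an event is partial-stated if any of its prev-events is) can be sketched like this. The function name and the `(event_id, prev_event_ids)` tuple shape are assumptions made for the example, not Synapse's storage schema.

```python
from typing import Iterable, List, Set, Tuple


def propagate_partial_state(
    join_event_id: str,
    new_events: Iterable[Tuple[str, List[str]]],
) -> Set[str]:
    """Return the IDs of events that should carry the partial state flag.

    `new_events` is assumed to be in the order the events are processed,
    as (event_id, prev_event_ids) pairs.
    """
    partial: Set[str] = {join_event_id}  # the join event itself is flagged
    for event_id, prev_event_ids in new_events:
        if any(prev in partial for prev in prev_event_ids):
            partial.add(event_id)
    return partial


partial = propagate_partial_state(
    "$join",
    [
        ("$msg1", ["$join"]),   # directly after the join
        ("$msg2", ["$msg1"]),   # inherits the flag transitively
        ("$other", ["$old"]),   # unrelated prev-event, not flagged
    ],
)
```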

|

| |

|

|

|

|

|

| |

The presence of this method was confusing, and mostly present for backwards

compatibility. Let's get rid of it.

Part of #11733

|

| |

|

|

|

|

|

|

|

|

|

| |

MSC3706 proposes changing the `/send_join` response:

> Any events returned within `state` can be omitted from `auth_chain`.

Currently, we rely on `m.room.create` being returned in `auth_chain`, but since

the `m.room.create` event must necessarily be part of the state, the above

change will break this.

In short, let's look for `m.room.create` in `state` rather than `auth_chain`.
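A rough sketch of the lookup change: search `state` for the create event instead of `auth_chain`. The response dict below is a simplified, made-up example and `find_create_event` is a hypothetical helper, not the function Synapse uses.

```python
from typing import Dict, List, Optional

Event = Dict[str, str]


def find_create_event(events: List[Event]) -> Optional[Event]:
    """Return the `m.room.create` event from a list of events, if present."""
    for ev in events:
        if ev.get("type") == "m.room.create" and ev.get("state_key") == "":
            return ev
    return None


send_join_response: Dict[str, List[Event]] = {
    "state": [
        {"type": "m.room.create", "state_key": "", "event_id": "$create"},
        {"type": "m.room.member", "state_key": "@alice:example.org", "event_id": "$join"},
    ],
    # Under the proposed change, events already in `state` may be omitted here.
    "auth_chain": [],
}

create = find_create_event(send_join_response["state"])
missing = find_create_event(send_join_response["auth_chain"])
```

With the reduced `auth_chain`, only the lookup against `state` finds the create event.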

|

| |

|

|

|

| |

We already have two copies of this code, in 2/3 of the callers of

`_auth_and_persist_outliers`. Before I add a third, let's push it down.

|

| |

|


| |

(MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793
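The ordering described in items 1 and 2 above, `depth` first with `stream_ordering` as the tie-break, newest to oldest, can be illustrated with a plain sort. The `Row` tuple and the sample values are invented for this sketch; the real code orders rows in the database.

```python
from typing import List, NamedTuple


class Row(NamedTuple):
    event_id: str
    depth: int
    stream_ordering: int


rows: List[Row] = [
    Row("$a", 10, 3),
    Row("$b", 10, 5),
    Row("$c", 11, 1),
    Row("$d", 10, 4),
]

# Newest first: highest depth wins, and events sharing a depth
# (as MSC2716 historical messages do) are ordered by stream_ordering.
newest_first = sorted(rows, key=lambda r: (r.depth, r.stream_ordering), reverse=True)
ordered_ids = [r.event_id for r in newest_first]
```

Sorting by `depth` alone would leave the relative order of `$a`, `$b`, and `$d` unspecified, which is the ambiguity the tie-break removes.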


#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.

>

> As mentioned, ideally we would do this, but the code necessary to make the fake edges gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.

>

> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties

|

| |

|

|

|

|

|

| |

I've never found this terribly useful. I think it was added in the early days

of Synapse, without much thought as to what would actually be useful to log,

and has just been cargo-culted ever since.

Rather, it tends to clutter up debug logs with useless information.

|

| |

|


| |

* `_auth_and_persist_outliers`: mark persisted events as outliers

Mark any events that get persisted via `_auth_and_persist_outliers` as, well,

outliers.

Currently this will be a no-op as everything will already be flagged as an

outlier, but I'm going to change that.

* `process_remote_join`: stop flagging as outlier

The events are now flagged as outliers later on, by `_auth_and_persist_outliers`.

* `send_join`: remove `outlier=True`

The events created here are returned in the result of `send_join` to

`FederationHandler.do_invite_join`. From there they are passed into

`FederationEventHandler.process_remote_join`, which passes them to

`_auth_and_persist_outliers`... which sets the `outlier` flag.

* `get_event_auth`: remove `outlier=True`

Stop flagging the events returned by `get_event_auth` as outliers. This method

is only called by `_get_remote_auth_chain_for_event`, which passes the results

into `_auth_and_persist_outliers`, which will flag them as outliers.

* `_get_remote_auth_chain_for_event`: remove `outlier=True`

We pass all the events into `_auth_and_persist_outliers`, which will now flag

the events as outliers.

* `_check_sigs_and_hash_and_fetch`: remove unused `outlier` parameter

This param is now never set to True, so we can remove it.

* `_check_sigs_and_hash_and_fetch_one`: remove unused `outlier` param

This is no longer set anywhere, so we can remove it.

* `get_pdu`: remove unused `outlier` parameter

... and chase it down into `get_pdu_from_destination_raw`.

* `event_from_pdu_json`: remove redundant `outlier` param

This is never set to `True`, so can be removed.

* changelog

* update docstring

|

| |

|


| |

Events returned by `backfill` should not be flagged as outliers.

Fixes:

```
AssertionError: null
  File "synapse/handlers/federation.py", line 313, in try_backfill
    dom, room_id, limit=100, extremities=extremities
  File "synapse/handlers/federation_event.py", line 517, in backfill
    await self._process_pulled_events(dest, events, backfilled=True)
  File "synapse/handlers/federation_event.py", line 642, in _process_pulled_events
    await self._process_pulled_event(origin, ev, backfilled=backfilled)
  File "synapse/handlers/federation_event.py", line 669, in _process_pulled_event
    assert not event.internal_metadata.is_outlier()
```

See https://sentry.matrix.org/sentry/synapse-matrixorg/issues/231992

Fixes #8894.

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

* Push `get_room_{min,max_stream_ordering}` into StreamStore

Both implementations of this are identical, so we may as well push it down and

get rid of the abstract base class nonsense.

* Remove redundant `StreamStore` class

This is empty now

* Remove redundant `get_current_events_token`

This was an exact duplicate of `get_room_max_stream_ordering`, so let's get rid

of it.

* newsfile

|

| |

|

| |

as of #11012, these parameters are unused.

|

| | |

|

| |

|

| |

Co-authored-by: Patrick Cloke <clokep@users.noreply.github.com>

|

| |

|

| |

Co-authored-by: Andrew Morgan <1342360+anoadragon453@users.noreply.github.com>

|

| |

|

|

|

|

|

| |

This is the final piece of the jigsaw for #9595. As with other changes before this one (eg #10771), we need to make sure that we auth the auth events in the right order, and actually check that their predecessors haven't been rejected.

To do this I've reused the existing code we use when persisting outliers elsewhere.

I've removed the code for attempting to fetch missing auth_events - the events should have been present in the send_join response, so the likely reason they are missing is that we couldn't verify them, so requesting them again is unlikely to help. Instead, we simply drop any state which relies on those auth events, as we do at a backwards-extremity. See also matrix-org/complement#216 for a test for this.

|

| |

|

| |

Remove some redundant code, and generally simplify.

|

| |

|

|

|

| |

This is just a lift-and-shift, because it fits more naturally here. We do

rename it to `process_remote_join` at the same time though.

|

| |

|

|

| |

... to `_auth_and_persist_outliers`, since that reflects its purpose better.

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

Currently, when we receive an event whose auth_events differ from those we expect, we state-resolve between the two state sets, and check that the event passes auth based on the resolved state.

This means that it's possible for us to accept events which don't pass auth at their declared auth_events (or where the auth events themselves were rejected), leading to problems down the line like #10083.

This change means we will:

* ignore any events where we cannot find the auth events

* reject any events whose auth events were rejected

* reject any events which do not pass auth at their declared auth_events.

Together with a whole raft of previous work, this is a partial fix to #9595.

Fixes #6643.

Based on #11009.
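The three rules listed in this change (ignore, reject, reject) can be sketched as a small decision function. `Outcome`, `StoredEvent`, and `classify` are hypothetical names, and `passes_auth` stands in for the real auth rules, which are far more involved.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List


class Outcome(Enum):
    IGNORE = "ignore"
    REJECT = "reject"
    ACCEPT = "accept"


@dataclass(frozen=True)
class StoredEvent:
    event_id: str
    rejected: bool = False


def classify(
    auth_event_ids: List[str],
    known: Dict[str, StoredEvent],
    passes_auth: Callable[[List[StoredEvent]], bool],
) -> Outcome:
    # Rule 1: we cannot find all of the auth events, so ignore the event.
    if any(event_id not in known for event_id in auth_event_ids):
        return Outcome.IGNORE
    auth_events = [known[event_id] for event_id in auth_event_ids]
    # Rule 2: any of the auth events was itself rejected.
    if any(e.rejected for e in auth_events):
        return Outcome.REJECT
    # Rule 3: the event fails auth at its declared auth events.
    if not passes_auth(auth_events):
        return Outcome.REJECT
    return Outcome.ACCEPT


known = {
    "$create": StoredEvent("$create"),
    "$bad": StoredEvent("$bad", rejected=True),
}
```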

|

| |

|

|

|

|

|

|

|

|

|

| |

This fixes a bug where we would accept an event whose `auth_events` include

rejected events, if the rejected event was shadowed by another `auth_event`

with same `(type, state_key)`.

The approach is to pass a list of auth events into

`check_auth_rules_for_event` instead of a dict, which of course means updating

the call sites.

This is an extension of #10956.
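To see why a dict keyed on `(type, state_key)` can hide a rejected auth event, consider the following sketch. `AuthEvent` and both helper functions are invented for illustration and do not mirror the signature of `check_auth_rules_for_event`.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AuthEvent:
    event_id: str
    type: str
    state_key: str
    rejected: bool = False


def any_rejected_via_dict(auth_events: List[AuthEvent]) -> bool:
    # Later entries overwrite earlier ones with the same key, so a
    # rejected event can be shadowed and never inspected.
    by_key: Dict[Tuple[str, str], AuthEvent] = {
        (e.type, e.state_key): e for e in auth_events
    }
    return any(e.rejected for e in by_key.values())


def any_rejected_via_list(auth_events: List[AuthEvent]) -> bool:
    # Every declared auth event is inspected.
    return any(e.rejected for e in auth_events)


auth_events = [
    AuthEvent("$rejected", "m.room.member", "@alice:example.org", rejected=True),
    AuthEvent("$ok", "m.room.member", "@alice:example.org"),
]
```

The dict-based check misses `$rejected` because `$ok` takes its slot, while the list-based check sees both events.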

|

| |

|

|

|

| |

Make sure that we correctly handle rooms where we do not yet have a

`min_depth`, and also add some comments and logging.

|

| |

|


| |

extremities (#11027)

Found while working on the Gitter backfill script and noticed

it only happened after we sent 7 batches, https://gitlab.com/gitterHQ/webapp/-/merge_requests/2229#note_665906390

When there are more than 5 backward extremities for a given depth,

backfill will throw an error because we sliced the extremity list

to 5 but then try to iterate over the full list. This causes

us to look for state that we never fetched and we get a `KeyError`.

Before when calling `/messages` when there are more than 5 backward extremities:

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/site-packages/synapse/http/server.py", line 258, in _async_render_wrapper
    callback_return = await self._async_render(request)
  File "/usr/local/lib/python3.8/site-packages/synapse/http/server.py", line 446, in _async_render
    callback_return = await raw_callback_return
  File "/usr/local/lib/python3.8/site-packages/synapse/rest/client/room.py", line 580, in on_GET
    msgs = await self.pagination_handler.get_messages(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/pagination.py", line 396, in get_messages
    await self.hs.get_federation_handler().maybe_backfill(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 133, in maybe_backfill
    return await self._maybe_backfill_inner(room_id, current_depth, limit)
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 386, in _maybe_backfill_inner
    likely_extremeties_domains = get_domains_from_state(states[e_id])
KeyError: '$zpFflMEBtZdgcMQWTakaVItTLMjLFdKcRWUPHbbSZJl'
```
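The shape of this bug, slicing a list and then iterating over the unsliced one, can be reproduced in a few lines. The variable names and the `example.org` value are illustrative and not taken from `_maybe_backfill_inner`.

```python
from typing import Dict, List

extremities: List[str] = [f"$event{i}" for i in range(7)]

# State is only fetched for the first five extremities...
limited = extremities[:5]
states: Dict[str, List[str]] = {e_id: ["example.org"] for e_id in limited}


def buggy() -> List[str]:
    # ...but the loop walks the full list, so the sixth ID is missing.
    return [domain for e_id in extremities for domain in states[e_id]]


def fixed() -> List[str]:
    # Iterate over the same sliced list that was used for the fetch.
    return [domain for e_id in limited for domain in states[e_id]]
```

Calling `buggy()` raises `KeyError` on `$event5`, while `fixed()` only touches IDs that have state.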

|

| |

|

| |

Include the event ids being persisted

|

| | |

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

versions (#10962)

We correctly allowed using the MSC2716 batch endpoint for

the room creator in existing room versions but accidentally didn't track

the events because of a logic flaw.

This prevented you from connecting subsequent chunks together because it would

throw the unknown batch ID error.

We only want to process MSC2716 events when:

- The room version supports MSC2716

- Any room where the homeserver has the `msc2716_enabled` experimental feature enabled and the event is from the room creator
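The two conditions above combine into a single predicate. This is a sketch with hypothetical parameter names; it assumes the room version support and the experimental flag are already available as booleans.

```python
def should_process_msc2716_event(
    room_version_supports_msc2716: bool,
    msc2716_enabled: bool,
    sender: str,
    room_creator: str,
) -> bool:
    """Either the room version supports MSC2716, or the experimental
    feature is on and the event comes from the room creator."""
    return room_version_supports_msc2716 or (
        msc2716_enabled and sender == room_creator
    )
```

For example, an event from the creator in an existing room version is processed only when the experimental feature is enabled.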

|

| |

|

|

|

| |

`_check_event_auth` is only called in two places, and only one of those sets

`send_on_behalf_of`. Warming the cache isn't really part of auth anyway, so

moving it out makes a lot more sense.

|

| |

|

| |

Add some more comments about wtf is going on here.

|

| |

|

|

|

|

| |

There's little point in doing a fancy state reconciliation dance if the event

itself is invalid.

Likewise, there's no point checking it again in `_check_for_soft_fail`.

|

| |

|

|

|

|

|

|

|

|

|

|

|

| |

Broadly, the existing `event_auth.check` function has two parts:

* a validation section: checks that the event isn't too big, that it has the right signatures, etc.

This bit is independent of the rest of the state in the room, and so need only be done once

for each event.

* an auth section: ensures that the event is allowed, given the rest of the state in the room.

This gets done multiple times, against various sets of room state, because it forms part of

the state res algorithm.

Currently, this is implemented with `do_sig_check` and `do_size_check` parameters, but I think

that makes everything hard to follow. Instead, we split the function in two and call each part

separately where it is needed.
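A simplified sketch of the split: one function for state-independent validation, run once per event, and one for state-dependent auth, run against each candidate state. The function names, the size constant, and the membership rule are assumptions for illustration, not the functions this change introduces.

```python
import json
from typing import Dict, Set

MAX_SIZE = 65536  # illustrative size limit, in bytes


class AuthError(Exception):
    pass


def validate_event(event: Dict[str, object]) -> None:
    """State-independent checks: only need to run once per event."""
    if len(json.dumps(event).encode("utf-8")) > MAX_SIZE:
        raise AuthError("event too large")
    if not event.get("signatures"):
        raise AuthError("event is not signed")


def check_auth_against_state(event: Dict[str, object], joined: Set[str]) -> None:
    """State-dependent check: may run many times during state resolution."""
    if event["sender"] not in joined:
        raise AuthError("sender is not in the room")


event: Dict[str, object] = {
    "sender": "@alice:example.org",
    "signatures": {"example.org": {}},
    "content": {},
}

validate_event(event)  # once
check_auth_against_state(event, {"@alice:example.org"})  # per state set
```

Callers that only need one half no longer have to pass flags such as `do_sig_check` or `do_size_check` to switch parts of a combined function off.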

|

| |

|


| |

* Inline `_check_event_auth` for outliers

When we are persisting an outlier, most of `_check_event_auth` is redundant:

* `_update_auth_events_and_context_for_auth` does nothing, because the

`input_auth_events` are (now) exactly the event's auth_events,

which means that `missing_auth` is empty.

* we don't care about soft-fail, kicking guest users or `send_on_behalf_of`

for outliers

... so the only thing that matters is the auth itself, so let's just do that.

* `_auth_and_persist_fetched_events_inner`: de-async `prep`

`prep` no longer calls any `async` methods, so let's make it synchronous.

* Simplify `_check_event_auth`

We no longer need to support outliers here, which makes things rather simpler.

* changelog

* lint

|

| | |

|

| |

|


| |

* Factor more stuff out of `_get_events_and_persist`

It turns out that the event-sorting algorithm in `_get_events_and_persist` is

also useful in other circumstances. Here we move the current

`_auth_and_persist_fetched_events` to `_auth_and_persist_fetched_events_inner`,

and then factor the sorting part out to `_auth_and_persist_fetched_events`.

* `_get_remote_auth_chain_for_event`: remove redundant `outlier` assignment

`get_event_auth` returns events with the outlier flag already set, so this is

redundant (though we need to update a test where `get_event_auth` is mocked).

* `_get_remote_auth_chain_for_event`: move existing-event tests earlier

Move a couple of tests outside the loop. This is a bit inefficient for now, but

a future commit will make it better. It should be functionally identical.

* `_get_remote_auth_chain_for_event`: use `_auth_and_persist_fetched_events`

We can use the same codepath for persisting the events fetched as part of an

auth chain as for those fetched individually by `_get_events_and_persist` for

building the state at a backwards extremity.

* `_get_remote_auth_chain_for_event`: use a dict for efficiency

`_auth_and_persist_fetched_events` sorts the events itself, so we no longer

need to care about maintaining the ordering from `get_event_auth` (and no

longer need to sort by depth in `get_event_auth`).

That means that we can use a map, making it easier to filter out events we

already have, etc.

* changelog

* `_auth_and_persist_fetched_events`: improve docstring

|

| |

|

|

|

| |

Combine the two loops over the list of events, and hence get rid of

`_NewEventInfo`. Also pass the event back alongside the context, so that it's

easier to process the result.

|

| |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| |

`_update_auth_events_and_context_for_auth` (#10884)

* Reload auth events from db after fetching and persisting

In `_update_auth_events_and_context_for_auth`, when we fetch the remote auth

tree and persist the returned events: load the missing events from the database

rather than using the copies we got from the remote server.

This is mostly in preparation for additional refactors, but does have an

advantage in that if we later get around to checking the rejected status, we'll

be able to make use of it.

* Factor out `_get_remote_auth_chain_for_event` from `_update_auth_events_and_context_for_auth`

* changelog

|

| |

|

|

|

|

| |

Constructing an EventContext for an outlier is actually really simple, and

there's no sense in going via an `async` method in the `StateHandler`.

This also means that we can resolve a bunch of FIXMEs.

|

| |

|

|

|

|

|

| |

Adds missing type hints to methods in the synapse.handlers

module and requires all methods to have type hints there.

This also removes the unused construct_auth_difference method

from the FederationHandler.

|

| |

|

|

| |

Instead of proxying through the magic getter of the RootConfig

object. This should be more performant (and is more explicit).

|

| |

|

| |

This is only called in two places, and the code seems much clearer without it.

|

| |

|

|

| |

I think I have finally teased apart the codepaths which handle outliers, and those that handle non-outliers.

Let's add some assertions to demonstrate my newfound knowledge.

|

| |

|

|

|

|

|

| |

If we're persisting an event E which has auth_events A1, A2, then we ought to make sure that we correctly auth

and persist A1 and A2, before we blindly accept E.

This PR does part of that - it persists the auth events first - but it does not fully solve the problem, because we

still don't check that the auth events weren't rejected.

|

| | |

|

| |

|

|

| |

It's now only used in a couple of places, so we can drop it altogether.

|

| |

|

| |

This is part of my ongoing war against BaseHandler. I've moved kick_guest_users into RoomMemberHandler (since it calls out to that handler anyway), and split maybe_kick_guest_users into the two places it is called.

|

| |

(#10566)

* Allow room creator to send MSC2716 related events in existing room versions

Discussed at https://github.com/matrix-org/matrix-doc/pull/2716/#discussion_r682474869

Restoring `get_create_event_for_room_txn` from

https://github.com/matrix-org/synapse/pull/10245/commits/44bb3f0cf5cb365ef9281554daceeecfb17cc94d

* Add changelog

* Stop people from trying to redact MSC2716 events in unsupported room versions

* Populate rooms.creator column for easy lookup

> From some [out of band discussion](https://matrix.to/#/!UytJQHLQYfvYWsGrGY:jki.re/$p2fKESoFst038x6pOOmsY0C49S2gLKMr0jhNMz_JJz0?via=jki.re&via=matrix.org), my plan is to use `rooms.creator`. But currently, we don't fill in `creator` for remote rooms when a user is invited to a room for example. So we need to add some code to fill in `creator` wherever we add to the `rooms` table. And also add a background update to fill in the rows missing `creator` (we can use the same logic that `get_create_event_for_room_txn` is doing by looking in the state events to get the `creator`).

>

> https://github.com/matrix-org/synapse/pull/10566#issuecomment-901616642

* Remove and switch away from get_create_event_for_room_txn

* Fix no create event being found because no state events persisted yet

* Fix and add tests for rooms creator bg update

* Populate rooms.creator field for easy lookup

Part of https://github.com/matrix-org/synapse/pull/10566

- Fill in creator whenever we insert into the rooms table

- Add background update to backfill any missing creator values

* Add changelog

* Fix usage

* Remove extra delta already included in #10697

* Don't worry about setting creator for invite

* Only iterate over rows missing the creator

See https://github.com/matrix-org/synapse/pull/10697#discussion_r695940898

* Use constant to fetch room creator field

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696803029

* More protection from other random types

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696806853

* Move new background update to end of list

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696814181

* Fix query casing

* Fix ambiguity iterating over cursor instead of list

Fix `psycopg2.ProgrammingError: no results to fetch` error

when tests run with Postgres.

```
SYNAPSE_POSTGRES=1 SYNAPSE_TEST_LOG_LEVEL=INFO python -m twisted.trial tests.storage.databases.main.test_room
```

---

We use `txn.fetchall` because it will return the results as a

list or an empty list when there are no results.

Docs:

> `cursor` objects are iterable, so, instead of calling explicitly fetchone() in a loop, the object itself can be used:

>

> https://www.psycopg.org/docs/cursor.html#cursor-iterable

And I'm guessing iterating over a raw cursor does something weird when there are no results.
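A minimal sketch of the `fetchall` pattern, assuming a DB-API cursor and a simplified `rooms(room_id, creator)` table. The function name and schema are illustrative, and the standard-library `sqlite3` module stands in for psycopg2 here, so this does not reproduce the `ProgrammingError` itself; it only shows that `fetchall` yields a plain list in both the populated and the empty case.

```python
import sqlite3
from typing import List, Tuple


def rooms_missing_creator(txn: sqlite3.Cursor) -> List[Tuple[str]]:
    """Return the rooms without a creator as a list (empty if there are none)."""
    txn.execute(
        "SELECT room_id FROM rooms WHERE creator IS NULL ORDER BY room_id"
    )
    # fetchall() materialises the result set, so callers iterate over a
    # list rather than over the raw cursor.
    return txn.fetchall()


conn = sqlite3.connect(":memory:")
txn = conn.cursor()
txn.execute("CREATE TABLE rooms (room_id TEXT PRIMARY KEY, creator TEXT)")
txn.executemany(
    "INSERT INTO rooms (room_id, creator) VALUES (?, ?)",
    [("!a:example.org", None), ("!b:example.org", "@alice:example.org")],
)

missing_before = rooms_missing_creator(txn)

# Once every row has a creator, the same call returns an empty list.
txn.execute("UPDATE rooms SET creator = '@bob:example.org' WHERE creator IS NULL")
missing_after = rooms_missing_creator(txn)
```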

---

Test CI failure: https://github.com/matrix-org/synapse/pull/10697/checks?check_run_id=3468916530

```
tests.test_visibility.FilterEventsForServerTestCase.test_large_room
===============================================================================
[FAIL]
Traceback (most recent call last):
  File "/home/runner/work/synapse/synapse/tests/storage/databases/main/test_room.py", line 85, in test_background_populate_rooms_creator_column
    self.get_success(
  File "/home/runner/work/synapse/synapse/tests/unittest.py", line 500, in get_success
    return self.successResultOf(d)
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/trial/_synctest.py", line 700, in successResultOf
    self.fail(
twisted.trial.unittest.FailTest: Success result expected on <Deferred at 0x7f4022f3eb50 current result: None>, found failure result instead:
Traceback (most recent call last):
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 701, in errback
    self._startRunCallbacks(fail)
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 764, in _startRunCallbacks
    self._runCallbacks()
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 858, in _runCallbacks
    current.result = callback( # type: ignore[misc]
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 1751, in gotResult
    current_context.run(_inlineCallbacks, r, gen, status)
--- <exception caught here> ---
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 1657, in _inlineCallbacks
    result = current_context.run(
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/failure.py", line 500, in throwExceptionIntoGenerator
    return g.throw(self.type, self.value, self.tb)
  File "/home/runner/work/synapse/synapse/synapse/storage/background_updates.py", line 224, in do_next_background_update
    await self._do_background_update(desired_duration_ms)
  File "/home/runner/work/synapse/synapse/synapse/storage/background_updates.py", line 261, in _do_background_update
    items_updated = await update_handler(progress, batch_size)
  File "/home/runner/work/synapse/synapse/synapse/storage/databases/main/room.py", line 1399, in _background_populate_rooms_creator_column
    end = await self.db_pool.runInteraction(
  File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 686, in runInteraction
    result = await self.runWithConnection(
  File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 791, in runWithConnection
    return await make_deferred_yieldable(
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 858, in _runCallbacks
    current.result = callback( # type: ignore[misc]
  File "/home/runner/work/synapse/synapse/tests/server.py", line 425, in <lambda>
    d.addCallback(lambda x: function(*args, **kwargs))
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/enterprise/adbapi.py", line 293, in _runWithConnection
    compat.reraise(excValue, excTraceback)
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/deprecate.py", line 298, in deprecatedFunction
    return function(*args, **kwargs)
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/compat.py", line 404, in reraise
    raise exception.with_traceback(traceback)
  File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/enterprise/adbapi.py", line 284, in _runWithConnection
    result = func(conn, *args, **kw)
  File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 786, in inner_func
    return func(db_conn, *args, **kwargs)
  File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 554, in new_transaction
    r = func(cursor, *args, **kwargs)
  File "/home/runner/work/synapse/synapse/synapse/storage/databases/main/room.py", line 1375, in _background_populate_rooms_creator_column_txn
    for room_id, event_json in txn:
psycopg2.ProgrammingError: no results to fetch
```

* Move code not under the MSC2716 room version underneath an experimental config option

See https://github.com/matrix-org/synapse/pull/10566#issuecomment-906437909

* Add ordering to rooms creator background update

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696815277

* Add comment to better document constant

See https://github.com/matrix-org/synapse/pull/10697#discussion_r699674458

* Use constant field

|

|

|

The idea here is to take anything to do with incoming events and move it out to a separate handler, as a way of making FederationHandler smaller.

|