
Previously, when creating a join event in /make_join, we would decide whether to include additional fields to satisfy restricted room checks based on the current state of the room. Then, when building the event, we would capture the forward extremities of the room to use as prev events.

This is subject to race conditions. For example, when leaving and rejoining a room, the following sequence of events leads to a misleading 403 response:

1. /make_join reads the current state of the room and sees that the user is still in the room. It decides to omit the field required for restricted room joins.
2. The leave event is persisted and the room's forward extremities are updated.
3. /make_join builds the event, using the post-leave forward extremities. The event then fails the restricted room checks.

To mitigate the race, we move the read of the forward extremities closer to the read of the current state. Ideally, we would compute the state based on the chosen prev events, but that can involve state resolution, which is expensive.

Signed-off-by: Sean Quah <seanq@matrix.org>
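A toy model makes the race easier to see. This is a minimal Python sketch, not Synapse code. `ToyRoom`, `snapshot`, `build_join_template` and the `needs_authorising_user` flag are all hypothetical. In the Matrix spec, the real restricted-join field is `join_authorised_via_users_server`. The sketch also assumes a lock that makes the two reads atomic. That is a stronger guarantee than the commit provides, since the commit only moves the reads closer together to narrow the window.

```python
import threading
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class ToyRoom:
    """Hypothetical in-memory stand-in for a room's current state."""

    members: Set[str]
    forward_extremities: List[str]
    lock: threading.Lock = field(default_factory=threading.Lock)

    def persist_leave(self, user_id: str, leave_event_id: str) -> None:
        # Persisting the leave updates membership and extremities together.
        with self.lock:
            self.members.discard(user_id)
            self.forward_extremities = [leave_event_id]

    def snapshot(self) -> Tuple[Set[str], List[str]]:
        # Read membership and forward extremities as one consistent pair.
        with self.lock:
            return set(self.members), list(self.forward_extremities)


def build_join_template(room: ToyRoom, user_id: str) -> dict:
    members, prev_events = room.snapshot()
    return {
        "prev_events": prev_events,
        # Decided from the *same* snapshot that supplied prev_events.
        "needs_authorising_user": user_id not in members,
    }
```

Because membership and prev events come from one snapshot, a template can never pair pre-leave membership with post-leave forward extremities. That pairing is exactly what produced the 403.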

---

* Fix order of partial state tables when purging

`partial_state_rooms` has an FK on `events` pointing to the join event we get from `/send_join`, so we must delete from that table before deleting from `events`.

**NB:** It would be nice to cancel any resync processes for the room being purged. We do not do this at present. To do so reliably, we'd need an internal HTTP "replication" endpoint, because the worker doing the resync process may be different from the one handling the purge request.

The first time the resync process tries to write data after the deletion, it will fail because we have deleted necessary data, e.g. auth events. AFAICS it will not retry the resync, so the only downside to not cancelling the resync is a scary-looking traceback.

(This is presumably extremely race-sensitive.)

* Changelog

* admist(?) -> between

* Warn about a race

* Fix typo, thanks Sean

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

---------

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>
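The ordering constraint can be reproduced in a self-contained way with SQLite. Synapse normally runs on PostgreSQL, but a non-deferred foreign key behaves the same way here. The two-table schema is a simplified assumption, and `join_event_id` and `purge_room` are hypothetical names. Deleting the referenced `events` row first violates the FK, while deleting the referencing `partial_state_rooms` row first succeeds.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is enabled.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE events (event_id TEXT PRIMARY KEY, room_id TEXT)")
    conn.execute(
        "CREATE TABLE partial_state_rooms ("
        " room_id TEXT PRIMARY KEY,"
        " join_event_id TEXT REFERENCES events (event_id))"
    )
    conn.execute("INSERT INTO events VALUES ('$join', '!room')")
    conn.execute("INSERT INTO partial_state_rooms VALUES ('!room', '$join')")
    conn.commit()
    return conn


def purge_room(conn: sqlite3.Connection, room_id: str, child_first: bool = True) -> None:
    # Table names come from this fixed list, never from user input.
    tables = ["partial_state_rooms", "events"]
    if not child_first:
        tables.reverse()
    with conn:  # commits on success, rolls back if any DELETE fails
        for table in tables:
            conn.execute(f"DELETE FROM {table} WHERE room_id = ?", (room_id,))
```

With `child_first=False`, the first `DELETE` raises `sqlite3.IntegrityError` and the transaction is rolled back. This is the failure that the reordering avoids.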

---

Fixes #12801.

Complement tests are at https://github.com/matrix-org/complement/pull/567.

Avoid blocking on full state when handling a subsequent join into a partial state room.

Also always perform a remote join into partial state rooms, since we do not know whether the joining user has been banned and want to avoid leaking history to banned users.

Signed-off-by: Mathieu Velten <mathieuv@matrix.org>

Co-authored-by: Sean Quah <seanq@matrix.org>

Co-authored-by: David Robertson <davidr@element.io>

---

state groups (#14675)

* add class UnpersistedEventContext

* modify create new client event to create unpersistedeventcontexts

* persist event contexts after creation

* fix tests to persist unpersisted event contexts

* cleanup

* misc lints + cleanup

* changelog + fix comments

* lints

* fix batch insertion?

* reduce redundant calculation

* add unpersisted event classes

* rework compute_event_context, split into function that returns unpersisted event context and then persists it

* use calculate_context_info to create unpersisted event contexts

* update typing

* $%#^&*

* black

* fix comments and consolidate classes, use attr.s for class

* requested changes

* lint

* requested changes

* requested changes

* refactor to be stupidly explicit

* clearer renaming and flow

* make partial state non-optional

* update docstrings

---------

Co-authored-by: Erik Johnston <erik@matrix.org>
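The bullets above describe splitting context computation from persistence. The idea is to build an "unpersisted" context first, then write its state group later, possibly together with others. This is a minimal sketch of that idea, assuming an in-memory store. The classes and fields are hypothetical simplifications, not Synapse's real `UnpersistedEventContext`.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

StateKey = Tuple[str, str]  # (event type, state_key)


@dataclass(frozen=True)
class PersistedContext:
    event_id: str
    state_group: int


@dataclass
class UnpersistedContext:
    event_id: str
    prev_state_group: Optional[int]
    state_delta: Dict[StateKey, str]  # state changes introduced by this event


class ToyStateGroupStore:
    def __init__(self) -> None:
        self.groups: Dict[int, Dict[StateKey, str]] = {}
        self.write_batches = 0

    def persist_batch(self, contexts: List[UnpersistedContext]) -> List[PersistedContext]:
        # One simulated DB round trip for the whole batch.
        self.write_batches += 1
        persisted = []
        for ctx in contexts:
            if not ctx.state_delta and ctx.prev_state_group is not None:
                # No state change: reuse the previous group instead of creating one.
                group = ctx.prev_state_group
            else:
                base: Dict[StateKey, str] = {}
                if ctx.prev_state_group is not None:
                    base = self.groups[ctx.prev_state_group]
                group = len(self.groups) + 1
                self.groups[group] = {**base, **ctx.state_delta}
            persisted.append(PersistedContext(ctx.event_id, group))
        return persisted
```

Because nothing is written until `persist_batch`, the caller decides when, and how many at once, contexts hit the database.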

---

Ensure that the list of servers in a partial state room always contains the server we joined off.

Also refactor `get_partial_state_servers_at_join` to return `None` when the given room is no longer partial stated, to explicitly indicate when the room has partial state. Otherwise, it's not clear whether an empty list means that the room has full state, or that the room is partial stated but the server we joined off told us that there are no servers in the room.

Signed-off-by: Sean Quah <seanq@matrix.org>
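The contract can be sketched with an in-memory store. The method name mirrors the one in the commit, but the class, its storage and the other methods are hypothetical. `None` means "no longer partial state", which is now distinct from "partial state, but no known servers".

```python
from typing import Dict, FrozenSet, Optional


class ToyPartialStateRooms:
    """Hypothetical store of partial-state rooms and their known servers."""

    def __init__(self) -> None:
        self._servers: Dict[str, FrozenSet[str]] = {}

    def record_partial_join(
        self, room_id: str, joined_via: str, servers_in_room: FrozenSet[str]
    ) -> None:
        # Always include the server we joined via, even if it omitted itself.
        self._servers[room_id] = frozenset(servers_in_room) | {joined_via}

    def mark_full_state(self, room_id: str) -> None:
        self._servers.pop(room_id, None)

    def get_partial_state_servers_at_join(self, room_id: str) -> Optional[FrozenSet[str]]:
        # None: the room has full state. A set: the room is still partial state.
        return self._servers.get(room_id)
```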

---

Due to the increased safety of `StrCollection` over `Collection[str]` and `Sequence[str]`.
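The safety gain comes from the fact that `str` is itself a `Collection[str]` (and a `Sequence[str]`). A bare string therefore type-checks where a list of strings was meant, and iterating it silently yields characters. The sketch below assumes an alias shaped like Synapse's, a union of concrete containers that includes `AbstractSet` per #14870; the exact definition may differ. The protection is static, so mypy rejects `hosts_strict("example.org")` while both functions behave identically at runtime.

```python
from typing import AbstractSet, Collection, List, Tuple, Union

# Assumed shape of the alias: concrete containers only, so a bare str is excluded.
StrCollection = Union[Tuple[str, ...], List[str], AbstractSet[str]]


def hosts_loose(hosts: Collection[str]) -> List[str]:
    # mypy accepts hosts_loose("example.org"), because str is a Collection[str].
    return [h for h in hosts]


def hosts_strict(hosts: StrCollection) -> List[str]:
    # mypy rejects hosts_strict("example.org"), because str is not in the Union.
    return [h for h in hosts]
```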

---

(#14870)

* Allow `AbstractSet` in `StrCollection`

Otherwise, frozensets are excluded. This will be useful in an upcoming commit where I plan to change a function that accepts `List[str]` to accept `StrCollection` instead.

* `rooms_to_exclude` -> `rooms_to_exclude_globally`

I am about to make use of this exclusion mechanism to exclude rooms for a specific user and a specific sync. This rename helps to clarify the distinction between the global config and the rooms to exclude for a specific sync.

* Better function names for internal sync methods

* Track a list of excluded rooms on SyncResultBuilder

I plan to feed it a list of partially stated rooms for this sync to ignore.

* Exclude partial state rooms during eager sync

using the mechanism established in the previous commit

* Track un-partial-state stream in sync tokens

So that we can work out which rooms have become fully stated during a given sync period.

* Fix mutation of `@cached` return value

This was fouling up a Complement test added alongside this PR.

Excluding a room would extend the set of forgotten rooms held in the cache, so that room could erroneously be considered forgotten in the future.

Introduced in #12310, Synapse 1.57.0. I don't think this had any user-visible side effects (until now).

* SyncResultBuilder: track rooms to force as newly joined

Similar plan as before. We've omitted rooms from certain sync responses; now we establish the mechanism to reintroduce them into future syncs.

* Read new field, to present rooms as newly joined

* Force un-partial-stated rooms to be newly-joined

for eager incremental syncs only, provided they're still fully stated

* Notify user stream listeners to wake up long polling syncs

* Changelog

* Typo fix

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

* Unnecessary list cast

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

* Rephrase comment

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

* Another comment

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

* Fixup merge(?)

* Poke notifier when receiving un-partial-stated msg over replication

* Fixup merge whoops

Thanks MV :)

Co-authored-by: Mathieu Velten <mathieuv@matrix.org>

Co-authored-by: Mathieu Velten <mathieuv@matrix.org>

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>
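The "Fix mutation of `@cached` return value" bug is a general hazard of memoising mutable results: every cache hit hands back the *same* object. Here `functools.lru_cache` stands in for Synapse's own `@cached` decorator, and the function names and data are hypothetical. The buggy version extends the cached set in place. The fixed version returns an immutable `frozenset` and builds a new set with `|`.

```python
from functools import lru_cache
from typing import AbstractSet, FrozenSet, Set

# Hypothetical data: rooms each user has forgotten.
FORGOTTEN = {"@alice:example.org": ("!old:example.org",)}


@lru_cache(maxsize=None)
def forgotten_rooms_mutable(user_id: str) -> Set[str]:
    return set(FORGOTTEN.get(user_id, ()))


@lru_cache(maxsize=None)
def forgotten_rooms(user_id: str) -> FrozenSet[str]:
    return frozenset(FORGOTTEN.get(user_id, ()))


def rooms_to_skip_buggy(user_id: str, excluded: AbstractSet[str]) -> Set[str]:
    rooms = forgotten_rooms_mutable(user_id)
    rooms.update(excluded)  # BUG: mutates the object stored in the cache
    return rooms


def rooms_to_skip(user_id: str, excluded: AbstractSet[str]) -> FrozenSet[str]:
    # `|` builds a new set and leaves the cached value untouched.
    return forgotten_rooms(user_id) | excluded
```

After one call to `rooms_to_skip_buggy`, the excluded room shows up in every later `forgotten_rooms_mutable` lookup. This is the "erroneously considered forgotten" symptom described above.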

---

finishing join (#14874)

* Faster joins: Update room stats and user directory on workers when done

When finishing a partial state join to a room, we update the current state of the room without persisting additional events. Workers receive notice of the current state update over replication, but neglect to wake the room stats and user directory updaters. These are then only triggered incidentally, the next time an event is persisted or an unrelated event persister sends out a stream position update.

In this commit, we wake the room stats and user directory updaters at the appropriate time.

Part of #12814 and #12815.

Signed-off-by: Sean Quah <seanq@matrix.org>

* fixup comment

Signed-off-by: Sean Quah <seanq@matrix.org>

---

* Enable Complement tests for Faster Remote Room Joins on worker-mode

* (dangerous) Add an override to allow Complement to use FRRJ under workers

* Newsfile

Signed-off-by: Olivier Wilkinson (reivilibre) <oliverw@matrix.org>

* Fix race where we didn't send out replication notification

* MORE HACKS

* Fix get_un_partial_stated_rooms_token to take instance_name

* Fix bad merge

* Remove warning

* Correctly advance un_partial_stated_room_stream

* Fix merge

* Add another notify_replication

* Fixups

* Create a separate ReplicationNotifier

* Fix test

* Fix portdb

* Create a separate ReplicationNotifier

* Fix test

* Fix portdb

* Fix presence test

* Newsfile

* Apply suggestions from code review

* Update changelog.d/14752.misc

Co-authored-by: Erik Johnston <erik@matrix.org>

* lint

Signed-off-by: Olivier Wilkinson (reivilibre) <oliverw@matrix.org>

Co-authored-by: Erik Johnston <erik@matrix.org>

---

* Avoid clearing out forward extremities when doing a second remote join

When joining a restricted room where the local homeserver does not have a user able to issue invites, we perform a second remote join. We want to avoid clearing out forward extremities in this case, because the forward extremities we have are up to date, and clearing them out creates a window in which the room can get bricked if Synapse crashes.

Signed-off-by: Sean Quah <seanq@matrix.org>

* Do a full join when doing a second remote join into a full state room

We cannot persist a partial state join event into a joined full state room, so we perform a full state join for such rooms instead. As a future optimization, we could always perform a partial state join and compute or retrieve the full state ourselves if necessary.

Signed-off-by: Sean Quah <seanq@matrix.org>

* Add lock around partial state flag for rooms

Signed-off-by: Sean Quah <seanq@matrix.org>

* Preserve partial state info when doing a second partial state join

Signed-off-by: Sean Quah <seanq@matrix.org>

* Add newsfile

* Add a TODO(faster_joins) marker

Signed-off-by: Sean Quah <seanq@matrix.org>
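The two decisions described above can be condensed into a small sketch. The function names are hypothetical. The sketch also assumes that a partial state join is otherwise preferred, i.e. that faster joins are enabled.

```python
from enum import Enum


class JoinKind(Enum):
    FULL_STATE = "full_state"
    PARTIAL_STATE = "partial_state"


def pick_join_kind(already_joined: bool, room_is_partial_state: bool) -> JoinKind:
    # A partial state join event cannot be persisted into a room we are
    # already joined to with full state, so fall back to a full state join.
    if already_joined and not room_is_partial_state:
        return JoinKind.FULL_STATE
    return JoinKind.PARTIAL_STATE


def should_clear_forward_extremities(already_joined: bool) -> bool:
    # On a second remote join our forward extremities are already up to date;
    # clearing them would open a window in which a crash could brick the room.
    return not already_joined
```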

---

Currently, we will try to start a new partial state sync every time we perform a remote join, which is undesirable if there is already one running for a given room.

We intend to perform remote joins whenever additional local users wish to join a partial state room, so let's ensure that we do not start more than one concurrent partial state sync for any given room.

------------------------------------------------------------------------

There is a race condition where the homeserver leaves a room and later rejoins while the partial state sync from the previous membership is still running. There is no guarantee that the previous partial state sync will process the latest join, so we restart it if needed.

Signed-off-by: Sean Quah <seanq@matrix.org>
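One way to get "at most one sync per room, restarted after a rejoin" is to keep a map of running `asyncio` tasks plus a set of rooms that need another pass. This is a minimal sketch under that assumption. `PartialStateSyncTracker`, `start` and `after_rejoin` are hypothetical names, not Synapse's implementation. `start` must be called from inside a running event loop, because it uses `asyncio.create_task`.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Set


class PartialStateSyncTracker:
    """Hypothetical helper: at most one partial state sync per room."""

    def __init__(self, do_sync: Callable[[str], Awaitable[None]]) -> None:
        self._do_sync = do_sync
        self._running: Dict[str, "asyncio.Task[None]"] = {}
        self._restart_requested: Set[str] = set()

    def is_running(self, room_id: str) -> bool:
        return room_id in self._running

    def start(self, room_id: str, after_rejoin: bool = False) -> None:
        if room_id in self._running:
            # Don't start a second sync. After a leave and rejoin, the running
            # sync may miss the new join, so ask it to go round once more.
            if after_rejoin:
                self._restart_requested.add(room_id)
            return
        self._running[room_id] = asyncio.create_task(self._run(room_id))

    async def _run(self, room_id: str) -> None:
        try:
            while True:
                self._restart_requested.discard(room_id)
                await self._do_sync(room_id)
                if room_id not in self._restart_requested:
                    break
        finally:
            self._running.pop(room_id, None)
```

A duplicate `start` for an ordinary additional join is a no-op, while `after_rejoin=True` causes exactly one extra pass once the current one finishes.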

---

Then adapts calling code to retry when needed, so that it doesn't return a 500 to clients.

Signed-off-by: Mathieu Velten <mathieuv@matrix.org>

Co-authored-by: Sean Quah <8349537+squahtx@users.noreply.github.com>

---

* Move `StateFilter` to `synapse.types`

* Changelog

---

replication. [rei:frrj/streams/unpsr] (#14473)

---

state (#14404)

Signed-off-by: Mathieu Velten <mathieuv@matrix.org>

---

Remove type hints from comments which have been added as Python type hints. This helps avoid drift between comments and reality, as well as removing redundant information.

Also adds some missing type hints which were simple to fill in.
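A before/after sketch of the kind of change described, using a hypothetical function. Types that live only in the docstring are invisible to mypy and can drift. Once they are real annotations, the docstring only needs to describe meaning, and the types become checkable and inspectable at runtime.

```python
from typing import List, get_type_hints


# Before: the types live only in the docstring and can silently drift.
def get_hosts_old(room_id, limit):
    """Return up to `limit` hosts in the room.

    Args:
        room_id (str): the room to inspect
        limit (int): maximum number of hosts
    Returns:
        list[str]
    """
    return []


# After: the types are annotations that mypy checks; the docstring keeps the meaning.
def get_hosts(room_id: str, limit: int) -> List[str]:
    """Return up to `limit` hosts in the room.

    Args:
        room_id: the room to inspect
        limit: maximum number of hosts
    """
    return []
```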

---

from `destination` pattern (#14096)

1. `federation_client.timestamp_to_event(...)` now handles all `destination` looping and uses our generic `_try_destination_list(...)` helper.

2. Consistently handling `NotRetryingDestination` and `FederationDeniedError` across `get_pdu`, backfill, and the generic `_try_destination_list`, which is used in many of the places where we use this pattern.

3. `get_pdu(...)` now returns `PulledPduInfo` so we know which `destination` we ended up pulling the PDU from.
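The "try each destination in turn" pattern can be sketched in isolation. `try_destination_list`, `PulledResult` and the locally defined exception classes are hypothetical stand-ins modelled on the names above, not Synapse's actual signatures. Expected conditions (backoff in force, federation blocked) are skipped quietly, unexpected errors are logged, and the result records which destination answered, in the spirit of `PulledPduInfo`.

```python
import logging
from dataclasses import dataclass
from typing import Callable, Generic, Iterable, List, Tuple, TypeVar

logger = logging.getLogger(__name__)
T = TypeVar("T")


# Local stand-ins for the Synapse exceptions of the same names.
class NotRetryingDestination(Exception):
    pass


class FederationDeniedError(Exception):
    pass


@dataclass
class PulledResult(Generic[T]):
    result: T
    pull_origin: str  # which destination actually answered


def try_destination_list(
    description: str, destinations: Iterable[str], callback: Callable[[str], T]
) -> PulledResult[T]:
    failures: List[Tuple[str, Exception]] = []
    for destination in destinations:
        try:
            return PulledResult(callback(destination), destination)
        except (NotRetryingDestination, FederationDeniedError) as e:
            # Expected: skip quietly and move on to the next destination.
            logger.debug("Skipping %s: %s", destination, e)
            failures.append((destination, e))
        except Exception as e:
            logger.warning("Failed to %s from %s: %s", description, destination, e)
            failures.append((destination, e))
    raise RuntimeError(f"Failed to {description} via any server: {failures}")
```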

---

creating a new room. (#14228)

---

a partial join (#14126)

---

invalid (#13816)

While https://github.com/matrix-org/synapse/pull/13635 stops us from doing the slow thing after we've already done it once, this PR stops us from doing one of the slow things in the first place.

Related to

- https://github.com/matrix-org/synapse/issues/13622

- https://github.com/matrix-org/synapse/pull/13635

- https://github.com/matrix-org/synapse/issues/13676

Part of https://github.com/matrix-org/synapse/issues/13356

Follow-up to https://github.com/matrix-org/synapse/pull/13815 which tracks event signature failures.

With this PR, we avoid the call to the costly `_get_state_ids_after_missing_prev_event`: the signature failure now counts as a failed attempt, and we filter events based on the backoff before calling `_get_state_ids_after_missing_prev_event`.

For example, this will save us 156s out of the 185s total that this `matrix.org` `/messages` request took. If you want to see the full Jaeger trace of this, you can drag and drop this `trace.json` into your own Jaeger: https://gist.github.com/MadLittleMods/4b12d0d0afe88c2f65ffcc907306b761

To explain this exact scenario around `/messages` -> backfill: we call `/backfill` and first check the signatures of the 100 events. We see bad signatures for `$luA4l7QHhf_jadH3mI-AyFqho0U2Q-IXXUbGSMq6h6M` and `$zuOn2Rd2vsC7SUia3Hp3r6JSkSFKcc5j3QTTqW_0jDw` (both member events). Then we process the 98 remaining events that have valid signatures, but one of them references `$luA4l7QHhf_jadH3mI-AyFqho0U2Q-IXXUbGSMq6h6M` as a `prev_event`. So we have to go through the whole `_get_state_ids_after_missing_prev_event` rigmarole, which pulls in those same events, which fail again because the signatures are still invalid.

- `backfill`
  - `outgoing-federation-request` `/backfill`
  - `_check_sigs_and_hash_and_fetch`
    - `_check_sigs_and_hash_and_fetch_one` for each event received over backfill
      - ❗ `$luA4l7QHhf_jadH3mI-AyFqho0U2Q-IXXUbGSMq6h6M` fails with `Signature on retrieved event was invalid.`: `unable to verify signature for sender domain xxx: 401: Failed to find any key to satisfy: _FetchKeyRequest(...)`
      - ❗ `$zuOn2Rd2vsC7SUia3Hp3r6JSkSFKcc5j3QTTqW_0jDw` fails with `Signature on retrieved event was invalid.`: `unable to verify signature for sender domain xxx: 401: Failed to find any key to satisfy: _FetchKeyRequest(...)`
  - `_process_pulled_events`
    - `_process_pulled_event` for each validated event
      - ❗ Event `$Q0iMdqtz3IJYfZQU2Xk2WjB5NDF8Gg8cFSYYyKQgKJ0` references `$luA4l7QHhf_jadH3mI-AyFqho0U2Q-IXXUbGSMq6h6M` as a `prev_event`, which is missing, so we try to get it
        - `_get_state_ids_after_missing_prev_event`
          - `outgoing-federation-request` `/state_ids`
          - ❗ `get_pdu` for `$luA4l7QHhf_jadH3mI-AyFqho0U2Q-IXXUbGSMq6h6M`, which fails the signature check again
          - ❗ `get_pdu` for `$zuOn2Rd2vsC7SUia3Hp3r6JSkSFKcc5j3QTTqW_0jDw`, which fails the signature check
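The fix amounts to recording the signature failure as a failed pull and consulting those records *before* doing the expensive lookup. This is a minimal sketch, assuming an in-memory record of failures. `FailedPullTracker` and `missing_prev_events_worth_fetching` are hypothetical names, and a fixed cooldown stands in for the real exponential backoff.

```python
import time
from typing import Dict, Iterable, List, Optional


class FailedPullTracker:
    def __init__(self, cooldown_s: float = 3600.0) -> None:
        self.cooldown_s = cooldown_s
        self._last_failure: Dict[str, float] = {}

    def record_failure(self, event_id: str, now: Optional[float] = None) -> None:
        # A bad signature counts as a failed pull attempt, like a network error.
        self._last_failure[event_id] = time.time() if now is None else now

    def in_backoff(self, event_id: str, now: Optional[float] = None) -> bool:
        last = self._last_failure.get(event_id)
        now = time.time() if now is None else now
        return last is not None and now - last < self.cooldown_s


def missing_prev_events_worth_fetching(
    missing: Iterable[str], tracker: FailedPullTracker, now: Optional[float] = None
) -> List[str]:
    # Filter *before* the costly state lookup, so events that just failed
    # their signature check don't trigger it again.
    return [e for e in missing if not tracker.in_backoff(e, now)]
```

If the filtered list is empty, the caller can skip the expensive call entirely.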

---

`knock_state_events` -> `knock_room_state` (#14102)

---

Fixes #13942. Introduced in #13575.

Basically, let's only get the ordered set of hosts out of the DB if we need an ordered set of hosts. Since we split the function up, the caching won't be as good, but I think it will still be fine, as e.g. multiple backfill requests for the same room will hit the cache.

---

Co-authored-by: Eric Eastwood <erice@element.io>

---

There is no need to grab thousands of backfill points when we only need 5 to make the `/backfill` request with. We need to grab a few extra in case the first few aren't visible in the history.

Previously, we grabbed thousands of backfill points from the database, then sorted and filtered them in the app. Fetching the 4.6k backfill points for `#matrix:matrix.org` from the database takes ~50ms - ~570ms so it's not like this saves a lot of time 🤷. But it might save us more time now that `get_backfill_points_in_room`/`get_insertion_event_backward_extremities_in_room` are more complicated after https://github.com/matrix-org/synapse/pull/13635

This PR moves the filtering and limiting to the SQL query so we just have less data to work with in the first place.

Part of https://github.com/matrix-org/synapse/issues/13356

---

cf. #12993 (comment), point 3

This stores all device list updates that we receive while partial joins are ongoing, and processes them once we have the full state.

Note: We don't actually process the device lists in the same ways as if we weren't partially joined. Instead of updating the device list remote cache, we simply notify local users that a change in the remote user's devices has happened. I think this is safe as if the local user requests the keys for the remote user and we don't have them we'll simply fetch them as normal.
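The store-then-process flow can be sketched as a small buffer. It is keyed by room here, which is an assumption: the text only says updates are stored while partial joins are ongoing. `DeviceListUpdateBuffer` and its methods are hypothetical. As the note above describes, "processing" just means notifying local users that the remote user's devices changed.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Set


class DeviceListUpdateBuffer:
    """Hypothetical buffer for device list updates in partial-state rooms."""

    def __init__(self, notify_local_users: Callable[[str], None]) -> None:
        self._notify = notify_local_users
        self._pending: DefaultDict[str, Set[str]] = defaultdict(set)

    def on_update(self, room_id: str, remote_user_id: str, room_is_partial: bool) -> None:
        if room_is_partial:
            # Hold the update until the room has full state; duplicates collapse.
            self._pending[room_id].add(remote_user_id)
        else:
            self._notify(remote_user_id)

    def on_full_state(self, room_id: str) -> None:
        # Full state is here: tell local users these remote users' devices changed.
        for user_id in sorted(self._pending.pop(room_id, set())):
            self._notify(user_id)
```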

---

Only try to backfill an event if we haven't tried it recently (exponential backoff). There's no need to keep retrying the same backfill point when it fails over and over.

Fix https://github.com/matrix-org/synapse/issues/13622

Fix https://github.com/matrix-org/synapse/issues/8451

Follow-up to https://github.com/matrix-org/synapse/pull/13589

Part of https://github.com/matrix-org/synapse/issues/13356
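Exponential backoff means that each further failure doubles the wait before the same backfill point is tried again, up to a cap. This is a minimal sketch. The base step and cap are hypothetical values, not Synapse's actual constants, and the function names are illustrative.

```python
from typing import Optional

BASE_BACKOFF_MS = 2 * 60 * 60 * 1000  # hypothetical base step: 2 hours
MAX_BACKOFF_MS = 7 * 24 * 60 * 60 * 1000  # hypothetical cap: 1 week


def backoff_ms(num_attempts: int) -> int:
    # Double the wait after each failure: 2h, 4h, 8h, ... up to the cap.
    return min(BASE_BACKOFF_MS * 2 ** (num_attempts - 1), MAX_BACKOFF_MS)


def should_try_backfill_point(
    num_attempts: int, last_attempt_ts: Optional[int], now_ms: int
) -> bool:
    if num_attempts == 0 or last_attempt_ts is None:
        return True  # never failed before
    return now_ms >= last_attempt_ts + backoff_ms(num_attempts)
```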

---

authorise them if they query a room which has partial state on our server. (#13823)

---

Optimize how we calculate `likely_domains` during backfill because I've seen this take 17s in production just to `get_current_state` which is used to `get_domains_from_state` (see case [*2. Loading tons of events* in the `/messages` investigation issue](https://github.com/matrix-org/synapse/issues/13356)).

There are 3 ways we currently calculate hosts that are in the room:

1. `get_current_state` -> `get_domains_from_state`
   - Used in `backfill` to calculate `likely_domains` and `/timestamp_to_event` because it was cargo-culted from `backfill`
   - This one is being eliminated in favor of `get_current_hosts_in_room` in this PR 🕳
2. `get_current_hosts_in_room`
   - Used for other federation things like sending read receipts and typing indicators
3. `get_hosts_in_room_at_events`
   - Used when pushing out events over federation to other servers in the `_process_event_queue_loop`

Fix https://github.com/matrix-org/synapse/issues/13626

Part of https://github.com/matrix-org/synapse/issues/13356

Mentioned in [internal doc](https://docs.google.com/document/d/1lvUoVfYUiy6UaHB6Rb4HicjaJAU40-APue9Q4vzuW3c/edit#bookmark=id.2tvwz3yhcafh)

### Query performance

#### Before

The query from `get_current_state` sucks just because we have to get all 80k events. And we see almost the exact same performance locally trying to get all of these events (16s vs 17s):

```
synapse=# SELECT type, state_key, event_id FROM current_state_events WHERE room_id = '!OGEhHVWSdvArJzumhm:matrix.org';
Time: 16035.612 ms (00:16.036)

synapse=# SELECT type, state_key, event_id FROM current_state_events WHERE room_id = '!OGEhHVWSdvArJzumhm:matrix.org';
Time: 4243.237 ms (00:04.243)
```

But what about `get_current_hosts_in_room`? When there are 8M rows in the `current_state_events` table, the previous query in `get_current_hosts_in_room` took 13s from complete freshness (when the events were first added), but it takes 930ms after a Postgres restart, or 390ms when run back to back.

```sh
$ psql synapse

synapse=# \timing on

synapse=# SELECT COUNT(DISTINCT substring(state_key FROM '@[^:]*:(.*)$'))
FROM current_state_events
WHERE
    type = 'm.room.member'
    AND membership = 'join'
    AND room_id = '!OGEhHVWSdvArJzumhm:matrix.org';
 count
-------
  4130
(1 row)

Time: 13181.598 ms (00:13.182)

synapse=# SELECT COUNT(*) from current_state_events where room_id = '!OGEhHVWSdvArJzumhm:matrix.org';
 count
-------
 80814

synapse=# SELECT COUNT(*) from current_state_events;
  count
---------
 8162847

synapse=# SELECT pg_size_pretty( pg_total_relation_size('current_state_events') );
 pg_size_pretty
----------------
 4702 MB
```

#### After

I'm not sure how long it takes from complete freshness, as I only really get that opportunity once (maybe by restarting the computer, but that's cumbersome), and it's not really relevant to normal operating times. Maybe you get closer to the fresh times the more access variability there is, so that Postgres caches aren't as exact. Update: the longest I've seen this run for is 6.4s, and 4.5s after a computer restart.

After a Postgres restart, it takes 330ms and running back to back takes 260ms.

```sh
$ psql synapse

synapse=# \timing on
Timing is on.

synapse=# SELECT
    substring(c.state_key FROM '@[^:]*:(.*)$') as host
FROM current_state_events c
/* Get the depth of the event from the events table */
INNER JOIN events AS e USING (event_id)
WHERE
    c.type = 'm.room.member'
    AND c.membership = 'join'
    AND c.room_id = '!OGEhHVWSdvArJzumhm:matrix.org'
GROUP BY host
ORDER BY min(e.depth) ASC;

Time: 333.800 ms
```

#### Going further

To improve things further, we could add a `limit` parameter to `get_current_hosts_in_room`. Realistically, we don't need 4k domains to choose from, because there is no way we're going to query that many before we either a) get an answer or b) give up.

Another thing we can do is optimize the query to use an index skip scan:

- https://wiki.postgresql.org/wiki/Loose_indexscan

- Index Skip Scan, https://commitfest.postgresql.org/37/1741/

- https://www.timescale.com/blog/how-we-made-distinct-queries-up-to-8000x-faster-on-postgresql/
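To spell out what the "After" query computes, here is a plain-Python mirror of it. It takes joined members, extracts the host with the same pattern, groups by host, and orders by each host's earliest member-event depth. The function name and row format are hypothetical. The `limit` argument is the "going further" idea, not something this PR adds. The name tie-break is an extra assumption added for determinism, since the SQL does not specify one.

```python
import re
from typing import Dict, Iterable, List, Optional, Tuple

# Same pattern as the SQL: the host is everything after the first colon.
HOST_RE = re.compile(r"@[^:]*:(.*)$")


def hosts_in_room_ordered(
    members: Iterable[Tuple[str, str, int]], limit: Optional[int] = None
) -> List[str]:
    """members: (state_key, membership, depth) rows for m.room.member events."""
    first_depth: Dict[str, int] = {}
    for state_key, membership, depth in members:
        if membership != "join":
            continue
        match = HOST_RE.search(state_key)
        if match is None:
            continue
        host = match.group(1)
        # GROUP BY host, keeping min(depth) per host.
        first_depth[host] = min(depth, first_depth.get(host, depth))
    # ORDER BY min(depth) ASC, with host name as a deterministic tie-break.
    ordered = sorted(first_depth, key=lambda h: (first_depth[h], h))
    return ordered if limit is None else ordered[:limit]
```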

---

Fix:

- https://github.com/matrix-org/synapse/pull/13535#discussion_r949582508

- https://github.com/matrix-org/synapse/pull/13533#discussion_r949577244

---

Instrument the federation/backfill part of `/messages` so it's easier to follow what's going on in Jaeger when viewing a trace.

Split out from https://github.com/matrix-org/synapse/pull/13440

Follow-up from https://github.com/matrix-org/synapse/pull/13368

Part of https://github.com/matrix-org/synapse/issues/13356

---

Instrument FederationStateIdsServlet - `/state_ids` so it's easier to follow what's going on in Jaeger when viewing a trace.

---

for a room which it has not fully joined yet. (#13416)

---

In Jaeger:

- Before: huge list of uncategorized database calls

- After: nice and collapsible into units of work

---

so that we raise the intended error instead.

Signed-off-by: Sean Quah <seanq@matrix.org>

---

fails. (#13403)

---

return `Tuple[Codes, dict]` (#13044)

Signed-off-by: David Teller <davidt@element.io>

Co-authored-by: Brendan Abolivier <babolivier@matrix.org>

---

Bounce recalculation of current state to the correct event persister, and move recalculation of current state into the event persistence queue, to avoid concurrent updates to a room's current state.

Also give recalculation of a room's current state a real stream ordering.

Signed-off-by: Sean Quah <seanq@matrix.org>

---

Whenever we want to persist an event, we first compute an event context, which includes the state at the event and a flag indicating whether the state is partial. After a lot of processing, we finally try to store the event in the database, which can fail for partial state events when the containing room has been un-partial stated in the meantime.

We detect the race as a foreign key constraint failure in the data store layer and turn it into a special `PartialStateConflictError` exception, which makes its way up to the method in which we computed the event context.

To make things difficult, the exception needs to cross a replication request: `/fed_send_events` for events coming over federation and `/send_event` for events from clients. We transport the `PartialStateConflictError` as a `409 Conflict` over replication and turn `409`s back into `PartialStateConflictError`s on the worker making the request.

All client events go through `EventCreationHandler.handle_new_client_event`, which is called in *a lot* of places. Instead of trying to update all the code which creates client events, we turn the `PartialStateConflictError` into a `429 Too Many Requests` in `EventCreationHandler.handle_new_client_event` and hope that clients take it as a hint to retry their request.

On the federation event side, there are 7 places which compute event contexts. 4 of them use outlier event contexts: `FederationEventHandler._auth_and_persist_outliers_inner`, `FederationHandler.do_knock`, `FederationHandler.on_invite_request` and `FederationHandler.do_remotely_reject_invite`. These events won't have the partial state flag, so we do not need to do anything for them.

The remaining 3 paths which create events are `FederationEventHandler.process_remote_join`, `FederationEventHandler.on_send_membership_event` and `FederationEventHandler._process_received_pdu`.

We can't experience the race in `process_remote_join`, unless we're handling an additional join into a partial state room, which currently blocks, so we make no attempt to handle it correctly.

`on_send_membership_event` is only called by `FederationServer._on_send_membership_event`, so we catch the `PartialStateConflictError` there and retry just once.

`_process_received_pdu` is called by `on_receive_pdu` for incoming events and by `_process_pulled_event` for backfill. The latter should never try to persist partial state events, so we ignore it. We catch the `PartialStateConflictError` in `on_receive_pdu` and retry just once.

Referring to the graph of code paths in https://github.com/matrix-org/synapse/issues/12988#issuecomment-1156857648 may help make sense of the above.

Signed-off-by: Sean Quah <seanq@matrix.org>
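The three mechanisms described above can be condensed into a sketch. The error crosses replication as a 409 and is turned back into the exception. Federation paths retry once, and client paths turn the conflict into a 429. `PartialStateConflictError` and `HttpResponseError` here are local stand-ins, the HTTP plumbing is reduced to a status code, and all the function names are hypothetical.

```python
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


class PartialStateConflictError(Exception):
    """Local stand-in for the exception described above."""


class HttpResponseError(Exception):
    def __init__(self, code: int) -> None:
        super().__init__(code)
        self.code = code


def to_replication_error(e: PartialStateConflictError) -> HttpResponseError:
    # Event persister side: send the conflict across replication as a 409.
    return HttpResponseError(409)


def from_replication_error(e: HttpResponseError) -> Exception:
    # Requesting worker: turn a 409 back into the original exception type.
    return PartialStateConflictError() if e.code == 409 else e


async def persist_with_one_retry(compute_and_persist: Callable[[], Awaitable[T]]) -> T:
    # Federation paths: recomputing the context sees the room's new full
    # state, so retry once; a second conflict propagates to the caller.
    try:
        return await compute_and_persist()
    except PartialStateConflictError:
        return await compute_and_persist()


async def handle_client_event(persist: Callable[[], Awaitable[T]]) -> T:
    # Client path: don't retry internally; ask the client to retry instead.
    try:
        return await persist()
    except PartialStateConflictError:
        raise HttpResponseError(429) from None
```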

---

return `Union[Allow, Codes]`. (#12857)

Co-authored-by: Brendan Abolivier <babolivier@matrix.org>

---

It's now implied by the room_version property on the event.

---

Instead, use the `room_version` property of the event we're validating.

The `room_version` was originally added as a parameter somewhere around #4482, but really it's been redundant since #6875 added a `room_version` field to `EventBase`.

---

... to help us keep track of these things

---

Signed-off-by: Sean Quah <seanq@matrix.org>

---

partial-state room (#12812)

Signed-off-by: Sean Quah <seanq@matrix.org>

---

accept state filters and update calls where possible (#12791)

---

Refactor how the `EventContext` class works, with the intention of reducing the amount of state we fetch from the DB during event processing.

The idea here is to get rid of the cached `current_state_ids` and `prev_state_ids` that live in the `EventContext`, and instead defer straight to the database (and its caching).

One change that may have a noticeable effect is that we no longer prefill the `get_current_state_ids` cache on a state change. However, that query is relatively light, since it's just a case of reading a table from the DB (unlike fetching state at an event, which is more heavyweight). For deployments with workers, this cache isn't even used.

Part of #12684

---

Try to avoid an OOM by checking fewer extremities.

Generally this is a big rewrite of _maybe_backfill, to try and fix some of the TODOs and other problems in it. It's best reviewed commit-by-commit.

---

We work through all the events with partial state, updating the state at each of them. Once that's done, we recalculate the state for the whole room, and then mark the room as having complete state.

---

Refactor and convert `Linearizer` to async. This makes a `Linearizer` cancellation bug easier to fix.

Also refactor to use an async context manager, which eliminates an unlikely footgun where code that doesn't immediately use the context manager could forget to release the lock.

Signed-off-by: Sean Quah <seanq@element.io>
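The value of the async context manager is that release happens in a `finally`, so it cannot be forgotten, even on exceptions or cancellation. This is a minimal per-key sketch built on `asyncio.Lock` and `contextlib.asynccontextmanager`. `ToyLinearizer` is hypothetical and allows only one holder per key, whereas Synapse's `Linearizer` supports more options.

```python
import asyncio
from contextlib import asynccontextmanager
from typing import AsyncIterator, Dict, Hashable


class ToyLinearizer:
    """Hypothetical per-key lock: at most one holder per key at a time."""

    def __init__(self) -> None:
        self._locks: Dict[Hashable, asyncio.Lock] = {}
        self._users: Dict[Hashable, int] = {}

    @asynccontextmanager
    async def queue(self, key: Hashable) -> AsyncIterator[None]:
        lock = self._locks.get(key)
        if lock is None:
            lock = self._locks[key] = asyncio.Lock()
        self._users[key] = self._users.get(key, 0) + 1
        try:
            # Released when the `async with` block exits, even on error/cancellation.
            async with lock:
                yield
        finally:
            self._users[key] -= 1
            if self._users[key] == 0:
                # Nobody holds or waits on this key any more; forget it.
                del self._users[key]
                del self._locks[key]
```

Callers write `async with linearizer.queue(room_id): ...`, so there is no separate acquire step whose result could be dropped before the lock is released.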

---

* Replace `get_state_for_pdu` with `get_state_ids_for_pdu` and `get_events_as_list`.

* Return a 404 from `/state` and `/state_ids` for an outlier

---

We don't *have* the state at a backwards-extremity, so this is never going to do anything useful.

---

When we get a partial_state response from send_join, store information in the database about it:

* store a record about the room as a whole having partial state, and stash the list of member servers too.
* flag the join event itself as having partial state
* also, for any new events whose prev-events are partial-stated, note that they will *also* be partial-stated.

We don't yet make any attempt to interpret this data, so API calls (and a bunch of other things) are just going to get incorrect data.
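The third bullet describes how the partial-state flag spreads forward through the DAG. This is a minimal sketch, assuming that an event inherits the flag if *any* of its prev events has it; the text does not spell out that exact rule. The dict-based store and the function names are hypothetical.

```python
from typing import Dict, Iterable


def is_partial_state_event(prev_event_ids: Iterable[str], partial_state: Dict[str, bool]) -> bool:
    # Assumed rule: a new event is partial-stated if any prev event is.
    return any(partial_state.get(e, False) for e in prev_event_ids)


def record_event(event_id: str, prev_event_ids: Iterable[str], partial_state: Dict[str, bool]) -> None:
    partial_state[event_id] = is_partial_state_event(prev_event_ids, partial_state)
```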

---

This method was confusing, and existed mostly for backwards compatibility. Let's get rid of it.

Part of #11733

---

... to ensure it gets a proper log context, mostly.

---

A follow-up to #12005, in which I apparently missed that there are a bunch of other places that assume the create event is in the auth chain.

---

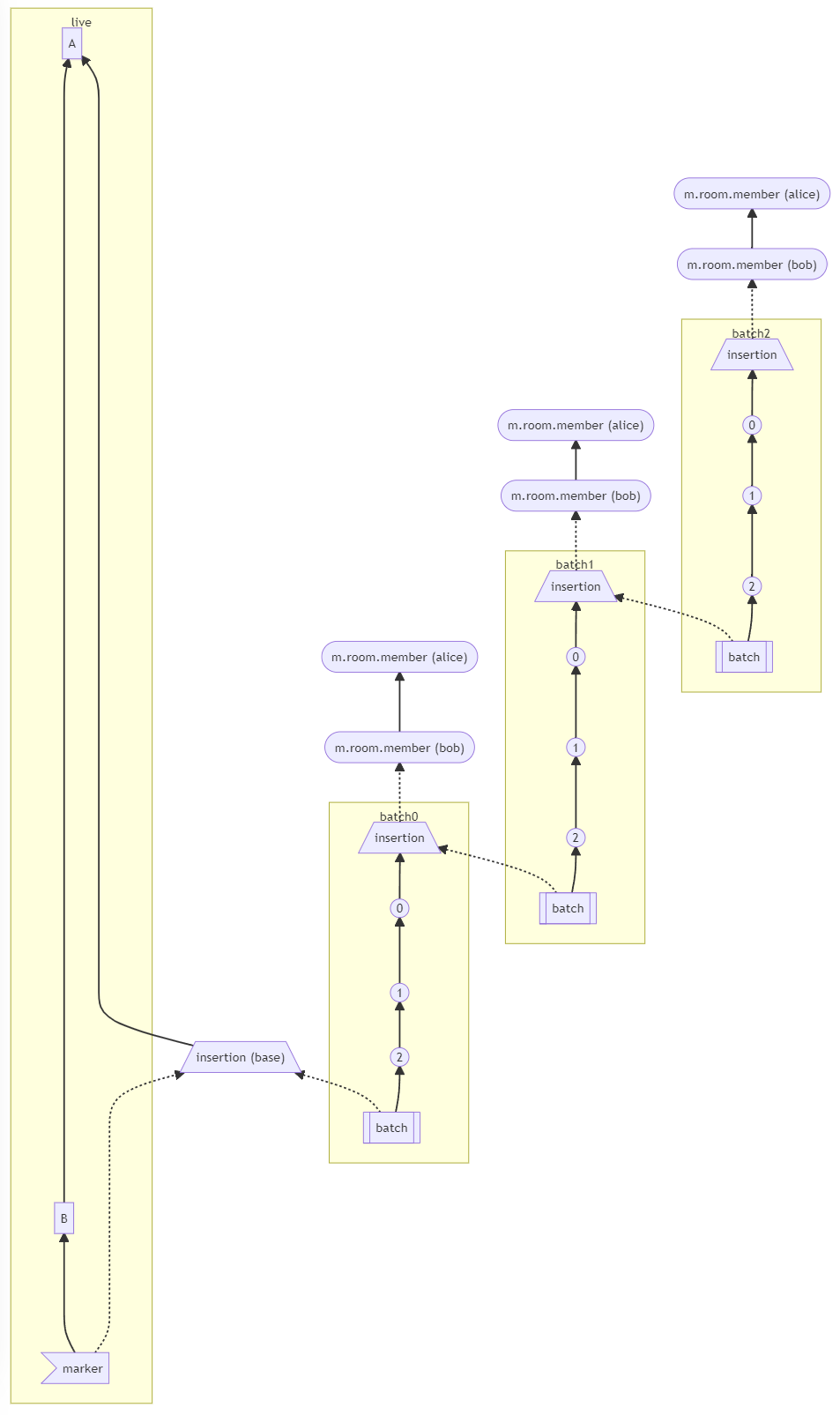

(MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by looking for a potential `prev_event` connection to the current event we're iterating over. We now rely solely on marker events, which, when processed, add the insertion event as an extremity so that the federating homeserver can ask about it when the time comes.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793
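Points 1 and 2 reduce to a single sort key. Here is a minimal sketch that assumes a hypothetical `Event` record carrying only `depth` and `stream_ordering`. It is not Synapse's code: the real backfill walks `prev_events` with a queue rather than sorting a flat list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    # Hypothetical stand-in for a persisted event; only the sort fields matter here.
    event_id: str
    depth: int
    stream_ordering: int


def order_for_backfill(events: List[Event]) -> List[Event]:
    """Newest first: by depth, then tie-break on stream_ordering.

    MSC2716 batches can put many historical events at the same depth,
    so the secondary key keeps the order deterministic.
    """
    return sorted(events, key=lambda e: (e.depth, e.stream_ordering), reverse=True)


events = [Event("$a", 5, 10), Event("$b", 5, 12), Event("$c", 6, 1)]
print([e.event_id for e in order_for_backfill(events)])  # ['$c', '$b', '$a']
```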


#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.
>
> As mentioned, ideally we would do this, but the code necessary to make the fake edges gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.
>
> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties


I've never found this terribly useful. I think it was added in the early days of Synapse, without much thought as to what would actually be useful to log, and has just been cargo-culted ever since.

Rather, it tends to clutter up debug logs with useless information.


closest event to a given timestamp (#9445)

MSC3030: https://github.com/matrix-org/matrix-doc/pull/3030

Client API endpoint. This will also go and fetch from the federation API endpoint if unable to find an event locally or we found an extremity with possibly a closer event we don't know about.

```
GET /_matrix/client/unstable/org.matrix.msc3030/rooms/<roomID>/timestamp_to_event?ts=<timestamp>&dir=<direction>
{
    "event_id": ...,
    "origin_server_ts": ...
}
```

Federation API endpoint:

```
GET /_matrix/federation/unstable/org.matrix.msc3030/timestamp_to_event/<roomID>?ts=<timestamp>&dir=<direction>
{
    "event_id": ...,
    "origin_server_ts": ...
}
```
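For the purely local half of this lookup, finding the closest event amounts to a binary search over events sorted by timestamp. The sketch below makes three assumptions: events are held in an in-memory list of `(origin_server_ts, event_id)` pairs, `dir` takes the values `f`/`b`, and the function name is hypothetical. The federation fallback described above is left out.

```python
import bisect
from typing import List, Optional, Tuple

Event = Tuple[int, str]  # (origin_server_ts, event_id), sorted by timestamp


def closest_event(events: List[Event], ts: int, direction: str) -> Optional[Event]:
    """Closest event at/after `ts` for dir=f, or at/before `ts` for dir=b."""
    timestamps = [t for t, _ in events]
    if direction == "f":
        i = bisect.bisect_left(timestamps, ts)  # first index with t >= ts
        return events[i] if i < len(events) else None
    if direction == "b":
        i = bisect.bisect_right(timestamps, ts)  # first index with t > ts
        return events[i - 1] if i > 0 else None
    raise ValueError("direction must be 'f' or 'b'")


timeline = [(100, "$a"), (200, "$b"), (300, "$c")]
print(closest_event(timeline, 150, "f"))  # (200, '$b')
print(closest_event(timeline, 150, "b"))  # (100, '$a')
```

Returning `None` when nothing lies in the requested direction is where a server would then consider asking other homeservers.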

Co-authored-by: Erik Johnston <erik@matrix.org>


This is just a lift-and-shift, because it fits more naturally here. We do rename it to `process_remote_join` at the same time, though.


This fixes a bug where we would accept an event whose `auth_events` include rejected events, if the rejected event was shadowed by another `auth_event` with the same `(type, state_key)`.

The approach is to pass a list of auth events into `check_auth_rules_for_event` instead of a dict, which of course means updating the call sites.

This is an extension of #10956.
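The shadowing bug is easy to reproduce in miniature. This sketch uses a hypothetical `AuthEvent` type and helper names rather than Synapse's real ones. It shows why collapsing auth events into a dict keyed by `(type, state_key)` hides an earlier rejected entry, while walking the full list does not.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AuthEvent:
    # Hypothetical minimal auth event.
    event_id: str
    type: str
    state_key: str
    rejected: bool = False


def accepts_via_dict(auth_events: List[AuthEvent]) -> bool:
    # Old shape: keying by (type, state_key) keeps only the last entry for
    # each key, so an earlier rejected event can be silently shadowed.
    by_key: Dict[Tuple[str, str], AuthEvent] = {
        (e.type, e.state_key): e for e in auth_events
    }
    return not any(e.rejected for e in by_key.values())


def accepts_via_list(auth_events: List[AuthEvent]) -> bool:
    # New shape: every auth event is inspected, duplicates included.
    return not any(e.rejected for e in auth_events)


auth = [
    AuthEvent("$bad", "m.room.member", "@alice:example.org", rejected=True),
    AuthEvent("$good", "m.room.member", "@alice:example.org"),
]
print(accepts_via_dict(auth), accepts_via_list(auth))  # True False
```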


extremities (#11027)

Found while working on the Gitter backfill script and noticed it only happened after we sent 7 batches, https://gitlab.com/gitterHQ/webapp/-/merge_requests/2229#note_665906390

When there are more than 5 backward extremities for a given depth, backfill will throw an error because we sliced the extremity list to 5 but then try to iterate over the full list. This causes us to look for state that we never fetched and we get a `KeyError`.

Before this fix, calling `/messages` when there were more than 5 backward extremities failed with:

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/site-packages/synapse/http/server.py", line 258, in _async_render_wrapper
    callback_return = await self._async_render(request)
  File "/usr/local/lib/python3.8/site-packages/synapse/http/server.py", line 446, in _async_render
    callback_return = await raw_callback_return
  File "/usr/local/lib/python3.8/site-packages/synapse/rest/client/room.py", line 580, in on_GET
    msgs = await self.pagination_handler.get_messages(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/pagination.py", line 396, in get_messages
    await self.hs.get_federation_handler().maybe_backfill(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 133, in maybe_backfill
    return await self._maybe_backfill_inner(room_id, current_depth, limit)
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 386, in _maybe_backfill_inner
    likely_extremeties_domains = get_domains_from_state(states[e_id])
KeyError: '$zpFflMEBtZdgcMQWTakaVItTLMjLFdKcRWUPHbbSZJl'
```
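The bug pattern, and the fix of iterating the same sliced list that state was fetched for, can be sketched as follows. `fetch_state` and `domains_for_extremities` are hypothetical stand-ins, not the real `_maybe_backfill_inner` code.

```python
from typing import Dict, List, Set


def fetch_state(event_ids: List[str]) -> Dict[str, Set[str]]:
    # Hypothetical stand-in for the per-extremity state lookup.
    return {e: {f"server-of-{e}"} for e in event_ids}


def domains_for_extremities(extremities: List[str], limit: int = 5) -> List[Set[str]]:
    chosen = extremities[:limit]
    states = fetch_state(chosen)
    # Iterate the same sliced list we fetched state for. Iterating the full
    # `extremities` list is what raised KeyError once there were > limit.
    return [states[e] for e in chosen]


extremities = [f"$e{i}" for i in range(7)]
print(len(domains_for_extremities(extremities)))  # 5
```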


The shared ratelimit function was replaced with a dedicated RequestRatelimiter class (accessible from the HomeServer object).

Other properties were copied to each sub-class that inherited from BaseHandler.


it. (#10933)

This fixes an "Event not signed by authorising server" error when transitioning a room member from join -> join, e.g. when updating a display name or avatar URL in restricted rooms.


Broadly, the existing `event_auth.check` function has two parts:

* a validation section: checks that the event isn't too big, that it has the right signatures, etc. This bit is independent of the rest of the state in the room, and so need only be done once for each event.

* an auth section: ensures that the event is allowed, given the rest of the state in the room. This gets done multiple times, against various sets of room state, because it forms part of the state res algorithm.

Currently, this is implemented with `do_sig_check` and `do_size_check` parameters, but I think that makes everything hard to follow. Instead, we split the function in two and call each part separately where it is needed.
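A minimal sketch of the split follows. The names `validate_event` and `check_auth_rules`, the `Event` shape, and the single membership rule are hypothetical simplifications, not Synapse's real signatures. The point is the call pattern: validation runs once, and the auth check runs once per candidate state.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Tuple

MAX_PDU_SIZE = 65536  # bytes, the Matrix spec's limit on event size

StateMap = Dict[Tuple[str, str], str]  # (type, state_key) -> membership, simplified


@dataclass(frozen=True)
class Event:
    sender: str
    type: str
    size: int
    signed_by: FrozenSet[str]


def validate_event(event: Event) -> None:
    """State-independent checks: only need to run once per event."""
    if event.size > MAX_PDU_SIZE:
        raise ValueError("event too large")
    sender_server = event.sender.split(":", 1)[1]
    if sender_server not in event.signed_by:
        raise ValueError("not signed by the sender's server")


def check_auth_rules(event: Event, state: StateMap) -> None:
    """State-dependent checks: may run many times, e.g. during state res."""
    if state.get(("m.room.member", event.sender)) != "join":
        raise ValueError("sender is not joined to the room")


event = Event("@alice:hs1", "m.room.message", 120, frozenset({"hs1"}))
validate_event(event)  # once
for candidate_state in ({("m.room.member", "@alice:hs1"): "join"},):
    check_auth_rules(event, candidate_state)  # once per candidate state
```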


Various refactors to use `RoomVersion` objects instead of room version identifiers.


Constructing an EventContext for an outlier is actually really simple, and there's no sense in going via an `async` method in the `StateHandler`.

This also means that we can resolve a bunch of FIXMEs.


Adds missing type hints to methods in the synapse.handlers module and requires all methods to have type hints there.

This also removes the unused construct_auth_difference method from the FederationHandler.


Instead of proxying through the magic getter of the RootConfig object. This should be more performant (and is more explicit).


Part of https://github.com/matrix-org/synapse/pull/10566

- Fill in creator whenever we insert into the rooms table

- Add background update to backfill any missing creator values
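The background update amounts to deriving each missing creator from the sender of the room's `m.room.create` event. The sketch below assumes a hypothetical two-table SQLite schema (`rooms(room_id, creator)`, `events(room_id, type, sender)`). A real background update would also work in batches and record its progress, which is omitted here.

```python
import sqlite3


def backfill_missing_creators(conn: sqlite3.Connection) -> int:
    """Fill rooms.creator from the sender of each room's m.room.create event."""
    cur = conn.execute(
        """
        UPDATE rooms
        SET creator = (
            SELECT e.sender FROM events AS e
            WHERE e.room_id = rooms.room_id AND e.type = 'm.room.create'
        )
        WHERE creator IS NULL
          AND EXISTS (
            SELECT 1 FROM events AS e
            WHERE e.room_id = rooms.room_id AND e.type = 'm.room.create'
          )
        """
    )
    conn.commit()
    return cur.rowcount  # number of rooms updated


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE rooms (room_id TEXT PRIMARY KEY, creator TEXT);
    CREATE TABLE events (event_id TEXT, room_id TEXT, type TEXT, sender TEXT);
    INSERT INTO rooms VALUES ('!r1', NULL), ('!r2', '@x:hs'), ('!r3', NULL);
    INSERT INTO events VALUES ('$c1', '!r1', 'm.room.create', '@alice:hs');
    """
)
print(backfill_missing_creators(conn))  # 1
```

Rooms that already have a creator, and rooms with no create event on record, are left untouched.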


The idea here is to take anything to do with incoming events and move it out to a separate handler, as a way of making FederationHandler smaller.


Given that backfill and get_missing_events are basically the same thing, it's somewhat crazy that we have entirely separate code paths for them. This makes backfill use the existing get_missing_events code, and then clears up all the unused code.


Here we split on_receive_pdu into two functions (on_receive_pdu and process_pulled_event), rather than having both cases in the same method. There's a tiny bit of overlap, but not that much.


This is a follow-up to #10615: it takes the code that constructs the state at a backwards extremity, and extracts it to a separate method.


* drop room pdu linearizer sooner

No point holding onto it while we recheck the db

* move out `missing_prevs` calculation

we're going to need `missing_prevs` whatever we do, so we may as well calculate it eagerly and just update it if it gets outdated.

* Add another `if missing_prevs` condition

this should be a no-op, since all the code inside the block already checks `if missing_prevs`

* reorder if conditions

This shouldn't change the logic at all.

* Push down `min_depth` read

No point reading it from the database unless we're going to use it.

* Collect the sent_to_us_directly code together

Move the remaining `sent_to_us_directly` code inside the `if sent_to_us_directly` block.

* Properly separate the `not sent_to_us_directly` branch

Since the only way this second block is now reachable is if we *didn't* go into the `sent_to_us_directly` branch, we can replace it with a simple `else`.

* changelog


Marking things as outliers to inhibit pushes is a sledgehammer to crack a nut. Move the test further down the stack so that we just inhibit the thing we want.


* Include outlier status in `str(event)`

In places where we log event objects, knowing whether or not you're dealing with an outlier is super useful.

* Remove duplicated logging in get_missing_events

When we process events received from get_missing_events, we log them twice (once in `_get_missing_events_for_pdu`, and once in `on_receive_pdu`). Reduce the duplication by removing the logging in `on_receive_pdu`, and ensuring the call sites do sensible logging.

* log in `on_receive_pdu` when we already have the event

* Log which prev_events we are missing

* changelog


* drop old-room hack

pretty sure we don't need this any more.

* Remove incorrect comment about modifying `context`

It doesn't look like the supplied context is ever modified.

* Stop `_auth_and_persist_event` modifying its parameters

This is only called in three places. Two of them don't pass `auth_events`, and the third doesn't use the dict after passing it in, so this should be a non-functional change.

* Stop `_check_event_auth` modifying its parameters

`_check_event_auth` is only called in three places. `on_send_membership_event` doesn't pass an `auth_events`, and `prep` and `_auth_and_persist_event` do not use the map after passing it in.

* Stop `_update_auth_events_and_context_for_auth` modifying its parameters

Return the updated auth event dict, rather than modifying the parameter.

This is only called from `_check_event_auth`.

* Improve documentation on `_auth_and_persist_event`

Rename `auth_events` parameter to better reflect what it contains.

* Improve documentation on `_NewEventInfo`

* Improve documentation on `_check_event_auth`

rename `auth_events` parameter to better describe what it contains

* changelog
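The "stop modifying its parameters" pattern above comes down to copying before updating and returning the result. A minimal sketch, with a hypothetical helper name and a simplified `(type, state_key) -> event_id` map:

```python
from typing import Dict, Mapping, Tuple

StateKey = Tuple[str, str]  # (event type, state_key)


def with_extra_auth_events(
    auth_events: Mapping[StateKey, str], extra: Mapping[StateKey, str]
) -> Dict[StateKey, str]:
    # Copy first so the caller's mapping is never modified in place;
    # the updated map is handed back as the return value instead.
    updated = dict(auth_events)
    updated.update(extra)
    return updated


original = {("m.room.create", ""): "$create"}
updated = with_extra_auth_events(original, {("m.room.member", "@a:hs"): "$join"})
print(len(original), len(updated))  # 1 2
```

Accepting a `Mapping` rather than a `Dict` also signals to type checkers that the argument is read-only.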


* Make historical messages available to federated servers

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

Follow-up to https://github.com/matrix-org/synapse/pull/9247

* Debug message not available on federation

* Add base starting insertion point when no chunk ID is provided

* Fix messages from multiple senders in historical chunk

Follow-up to https://github.com/matrix-org/synapse/pull/9247

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

---

Previously, Synapse would throw a 403, `Cannot force another user to join.`, because we were trying to use `?user_id` from a single virtual user which did not match with messages from other users in the chunk.

* Remove debug lines

* Messing with selecting insertion event extremities

* Move db schema change to new version

* Add more better comments

* Make a fake requester with just what we need

See https://github.com/matrix-org/synapse/pull/10276#discussion_r660999080

* Store insertion events in table

* Make base insertion event float off on its own

See https://github.com/matrix-org/synapse/pull/10250#issuecomment-875711889

Conflicts:

synapse/rest/client/v1/room.py

* Validate that the app service can actually control the given user

See https://github.com/matrix-org/synapse/pull/10276#issuecomment-876316455

Conflicts:

synapse/rest/client/v1/room.py

* Add some better comments on what we're trying to check for

* Continue debugging

* Share validation logic

* Add inserted historical messages to /backfill response

* Remove debug sql queries

* Some marker event implementation trials

* Clean up PR

* Rename insertion_event_id to just event_id

* Add some better sql comments

* More accurate description

* Add changelog

* Make it clear what MSC the change is part of

* Add more detail on which insertion event came through

* Address review and improve sql queries

* Only use event_id as unique constraint

* Fix test case where insertion event is already in the normal DAG

* Remove debug changes

* Add support for MSC2716 marker events

* Process markers when we receive it over federation

* WIP: make hs2 backfill historical messages after marker event

* hs2 to better ask for insertion event extremity

But running into the `sqlite3.IntegrityError: NOT NULL constraint failed: event_to_state_groups.state_group` error

* Add insertion_event_extremities table

* Switch to chunk events so we can auth via power_levels

Previously, we were using `content.chunk_id` to connect one chunk to another. But these events can be from any `sender` and we can't tell who should be able to send historical events. We know we only want the application service to do it but these events have the sender of a real historical message, not the application service user ID as the sender. Other federated homeservers also have no indicator which senders are an application service on the originating homeserver.

So we want to auth all of the MSC2716 events via power_levels and have them be sent by the application service with proper PL levels in the room.

* Switch to chunk events for federation

* Add unstable room version to support new historical PL

* Messy: Fix undefined state_group for federated historical events

```
2021-07-13 02:27:57,810 - synapse.handlers.federation - 1248 - ERROR - GET-4 - Failed to backfill from hs1 because NOT NULL constraint failed: event_to_state_groups.state_group
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 1216, in try_backfill
    await self.backfill(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 1035, in backfill
    await self._auth_and_persist_event(dest, event, context, backfilled=True)
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 2222, in _auth_and_persist_event
    await self._run_push_actions_and_persist_event(event, context, backfilled)
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 2244, in _run_push_actions_and_persist_event
    await self.persist_events_and_notify(
  File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 3290, in persist_events_and_notify
    events, max_stream_token = await self.storage.persistence.persist_events(
  File "/usr/local/lib/python3.8/site-packages/synapse/logging/opentracing.py", line 774, in _trace_inner
    return await func(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 320, in persist_events
    ret_vals = await yieldable_gather_results(enqueue, partitioned.items())
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 237, in handle_queue_loop
    ret = await self._per_item_callback(
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 577, in _persist_event_batch
    await self.persist_events_store._persist_events_and_state_updates(
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 176, in _persist_events_and_state_updates
    await self.db_pool.runInteraction(
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 681, in runInteraction
    result = await self.runWithConnection(
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 770, in runWithConnection
    return await make_deferred_yieldable(
  File "/usr/local/lib/python3.8/site-packages/twisted/python/threadpool.py", line 238, in inContext
    result = inContext.theWork() # type: ignore[attr-defined]
  File "/usr/local/lib/python3.8/site-packages/twisted/python/threadpool.py", line 254, in <lambda>
    inContext.theWork = lambda: context.call( # type: ignore[attr-defined]
  File "/usr/local/lib/python3.8/site-packages/twisted/python/context.py", line 118, in callWithContext
    return self.currentContext().callWithContext(ctx, func, *args, **kw)
  File "/usr/local/lib/python3.8/site-packages/twisted/python/context.py", line 83, in callWithContext
    return func(*args, **kw)
  File "/usr/local/lib/python3.8/site-packages/twisted/enterprise/adbapi.py", line 293, in _runWithConnection
    compat.reraise(excValue, excTraceback)
  File "/usr/local/lib/python3.8/site-packages/twisted/python/deprecate.py", line 298, in deprecatedFunction
    return function(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/twisted/python/compat.py", line 403, in reraise
    raise exception.with_traceback(traceback)
  File "/usr/local/lib/python3.8/site-packages/twisted/enterprise/adbapi.py", line 284, in _runWithConnection
    result = func(conn, *args, **kw)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 765, in inner_func
    return func(db_conn, *args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 549, in new_transaction
    r = func(cursor, *args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/synapse/logging/utils.py", line 69, in wrapped
    return f(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 385, in _persist_events_txn
    self._store_event_state_mappings_txn(txn, events_and_contexts)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 2065, in _store_event_state_mappings_txn
    self.db_pool.simple_insert_many_txn(
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 923, in simple_insert_many_txn
    txn.execute_batch(sql, vals)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 280, in execute_batch
    self.executemany(sql, args)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 300, in executemany
    self._do_execute(self.txn.executemany, sql, *args)
  File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 330, in _do_execute
    return func(sql, *args)
sqlite3.IntegrityError: NOT NULL constraint failed: event_to_state_groups.state_group
```

* Revert "Messy: Fix undefined state_group for federated historical events"

This reverts commit 187ab28611546321e02770944c86f30ee2bc742a.

* Fix federated events being rejected for no state_groups

Add fix from https://github.com/matrix-org/synapse/pull/10439 until it merges.

* Adapting to experimental room version

* Some log cleanup

* Add better comments around extremity fetching code and why

* Rename to be more accurate to what the function returns

* Add changelog

* Ignore rejected events

* Use simplified upsert

* Add Erik's explanation of extra event checks

See https://github.com/matrix-org/synapse/pull/10498#discussion_r680880332

* Clarify that the depth is not directly correlated to the backwards extremity that we return

See https://github.com/matrix-org/synapse/pull/10498#discussion_r681725404

* lock only matters for sqlite

See https://github.com/matrix-org/synapse/pull/10498#discussion_r681728061

* Move new SQL changes to its own delta file

* Clean up upsert docstring

* Bump database schema version (62)
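Authing the MSC2716 events "via power_levels" builds on the standard Matrix power-level comparison. The sketch below shows only that generic rule: the sender's level (from `users`, else `users_default`) must reach the level required for the event type (from `events`, else `events_default` or `state_default`). It deliberately does not model the new historical PL that the unstable room version adds, whose details are not given here. `example.historical` is a hypothetical event type.

```python
from typing import Any, Dict


def can_send(
    power_levels: Dict[str, Any], sender: str, event_type: str, is_state: bool
) -> bool:
    """Generic Matrix power-level check for sending an event of `event_type`."""
    user_level = power_levels.get("users", {}).get(
        sender, power_levels.get("users_default", 0)
    )
    if is_state:
        default_required = power_levels.get("state_default", 50)
    else:
        default_required = power_levels.get("events_default", 0)
    required = power_levels.get("events", {}).get(event_type, default_required)
    return user_level >= required


power_levels = {
    "users": {"@bridge:example.org": 100},
    # Hypothetical event type standing in for the MSC2716 historical events.
    "events": {"example.historical": 100},
}
print(can_send(power_levels, "@bridge:example.org", "example.historical", False))  # True
print(can_send(power_levels, "@alice:example.org", "example.historical", False))  # False
```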


scrollback history (MSC2716) (#10245)

* Make historical messages available to federated servers

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

Follow-up to https://github.com/matrix-org/synapse/pull/9247

* Debug message not available on federation

* Add base starting insertion point when no chunk ID is provided

* Fix messages from multiple senders in historical chunk

Follow-up to https://github.com/matrix-org/synapse/pull/9247

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

---

Previously, Synapse would throw a 403, `Cannot force another user to join.`, because we were trying to use `?user_id` from a single virtual user which did not match with messages from other users in the chunk.

* Remove debug lines

* Messing with selecting insertion event extremities

* Move db schema change to new version

* Add more better comments

* Make a fake requester with just what we need

See https://github.com/matrix-org/synapse/pull/10276#discussion_r660999080

* Store insertion events in table

* Make base insertion event float off on its own

See https://github.com/matrix-org/synapse/pull/10250#issuecomment-875711889

Conflicts:

synapse/rest/client/v1/room.py

* Validate that the app service can actually control the given user

See https://github.com/matrix-org/synapse/pull/10276#issuecomment-876316455

Conflicts:

synapse/rest/client/v1/room.py

* Add some better comments on what we're trying to check for

* Continue debugging

* Share validation logic

* Add inserted historical messages to /backfill response

* Remove debug sql queries

* Some marker event implementation trials

* Clean up PR

* Rename insertion_event_id to just event_id

* Add some better sql comments

* More accurate description

* Add changelog

* Make it clear what MSC the change is part of

* Add more detail on which insertion event came through

* Address review and improve sql queries

* Only use event_id as unique constraint

* Fix test case where insertion event is already in the normal DAG

* Remove debug changes

* Switch to chunk events so we can auth via power_levels

Previously, we were using `content.chunk_id` to connect one chunk to another. But these events can be from any `sender` and we can't tell who should be able to send historical events. We know we only want the application service to do it but these events have the sender of a real historical message, not the application service user ID as the sender. Other federated homeservers also have no indicator which senders are an application service on the originating homeserver.

So we want to auth all of the MSC2716 events via power_levels and have them be sent by the application service with proper PL levels in the room.

* Switch to chunk events for federation

* Add unstable room version to support new historical PL

* Fix federated events being rejected for no state_groups

Add fix from https://github.com/matrix-org/synapse/pull/10439 until it merges.

* Only connect base insertion event to prev_event_ids

Per discussion with @erikjohnston, https://matrix.to/#/!UytJQHLQYfvYWsGrGY:jki.re/$12bTUiObDFdHLAYtT7E-BvYRp3k_xv8w0dUQHibasJk?via=jki.re&via=matrix.org

* Make it possible to get the room_version with txn

* Allow but ignore historical events in unsupported room version

See https://github.com/matrix-org/synapse/pull/10245#discussion_r675592489

We can't reject historical events on unsupported room versions because homeservers without knowledge of MSC2716 or the new room version don't reject historical events either.

Since we can't rely on the auth check here to stop historical events on unsupported room versions, I've added some additional checks in the processing/persisting code (`synapse/storage/databases/main/events.py` -> `_handle_insertion_event` and `_handle_chunk_event`). I've had to do some refactoring so there is a method to fetch the room version by `txn`.

* Move to unique index syntax

See https://github.com/matrix-org/synapse/pull/10245#discussion_r675638509

* High-level document how the insertion->chunk lookup works

* Remove create_event fallback for room_versions

See https://github.com/matrix-org/synapse/pull/10245/files#r677641879

* Use updated method name


(#10254)


(#10386)

Port the third-party event rules interface to the generic module interface introduced in v1.37.0


This PR is tantamount to running

```
pyupgrade --py36-plus --keep-percent-format `find synapse/ -type f -name "*.py"`
```

Part of #9744


Instead of mixing them with user authentication methods.


Rather than persisting rejected events via `send_join` and friends, raise a 403 if someone tries to pull a fast one.


The idea here is to stop people sending things that aren't joins/leaves/knocks through these endpoints: previously you could send anything you liked through them. I wasn't able to find any security holes from doing so, but it doesn't sound like a good thing.
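The restriction boils down to checking the event's type and `content.membership` against the endpoint it arrived on. A minimal sketch, assuming hypothetical helper names and using `PermissionError` as a stand-in for the 403 a homeserver would return:

```python
from typing import Any, Dict

# Membership each federation endpoint is allowed to carry.
EXPECTED_MEMBERSHIP = {"send_join": "join", "send_leave": "leave", "send_knock": "knock"}


def check_membership_event(endpoint: str, event: Dict[str, Any]) -> None:
    """Reject anything that isn't the membership event this endpoint is for."""
    if event.get("type") != "m.room.member":
        raise PermissionError(f"{endpoint} only accepts m.room.member events")
    expected = EXPECTED_MEMBERSHIP[endpoint]
    membership = event.get("content", {}).get("membership")
    if membership != expected:
        raise PermissionError(
            f"{endpoint} expects membership {expected!r}, got {membership!r}"
        )


check_membership_event(
    "send_join", {"type": "m.room.member", "content": {"membership": "join"}}
)  # accepted: no exception
```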


Ensure that events sent via `send_leave` and `send_knock` are sent on to the rest of the federation.


An accidental mis-ordering of operations during #6739 technically allowed an incoming knock event over federation to be persisted before it was checked against any configured Third Party Access Rules modules.

This PR corrects that by performing the TPAR check *before* persisting the event.


Reformat all files with the new version.

Signed-off-by: Marcus Hoffmann <bubu@bubu1.eu>


Follow-up to https://github.com/matrix-org/synapse/pull/10156#discussion_r650292223


endpoints. (#10167)

* Room version 7 for knocking.

* Stable prefixes and endpoints (both client and federation) for knocking.

* Removes the experimental configuration flag.


Spawned from missing messages we were seeing on `matrix.org` from a federated Gitter bridged room, https://gitlab.com/gitterHQ/webapp/-/issues/2770.

The underlying issue in Synapse is tracked by https://github.com/matrix-org/synapse/issues/10066 where the message and join event race and the message is `soft_failed` before the `join` event reaches the remote federated server.

Fewer soft_failed events = better, and usually this should only trigger for events where people are doing bad things and trying to fuzz and fake everything.


This PR aims to implement the knock feature as proposed in https://github.com/matrix-org/matrix-doc/pull/2403

Signed-off-by: Sorunome mail@sorunome.de

Signed-off-by: Andrew Morgan andrewm@element.io


Fixes #10123


If backfilling is slow then the client may time out and retry, causing Synapse to start a new `/backfill` before the existing backfill has finished, duplicating work.
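One way to avoid the duplicated work is to let a retry join the backfill already in flight for that room instead of starting another. The sketch below illustrates this with `asyncio`. It is an illustration of the idea, not Synapse's fix: Synapse runs on Twisted, and its actual mechanism may differ. `InFlightDeduplicator` is a hypothetical name.

```python
import asyncio
from typing import Awaitable, Callable, Dict


class InFlightDeduplicator:
    """Share one in-flight task per key instead of starting a duplicate."""

    def __init__(self) -> None:
        self._in_flight: Dict[str, "asyncio.Future[None]"] = {}

    async def run(self, key: str, work: Callable[[], Awaitable[None]]) -> None:
        task = self._in_flight.get(key)
        if task is None:
            task = asyncio.ensure_future(work())
            self._in_flight[key] = task
            # Forget the task once it finishes so later calls start fresh.
            task.add_done_callback(lambda _t: self._in_flight.pop(key, None))
        # shield(): if one caller is cancelled (e.g. client timed out), the
        # shared work keeps running for the other callers.
        await asyncio.shield(task)


async def demo() -> int:
    calls = 0

    async def backfill() -> None:
        nonlocal calls
        calls += 1
        await asyncio.sleep(0.01)

    dedup = InFlightDeduplicator()
    # The original request and the client's retry overlap.
    await asyncio.gather(dedup.run("!room", backfill), dedup.run("!room", backfill))
    return calls


print(asyncio.run(demo()))  # 1
```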


Fixes #9956.


Empirically, this helped my server considerably when handling gaps in Matrix HQ. The problem was that we would repeatedly call have_seen_events for the same set of (50K or so) auth_events, each of which would take many minutes to complete, even though it's only an index scan.


(#10082)


To be more consistent with similar code. The check now automatically raises an AuthError instead of passing back a boolean. It also absorbs some shared logic between callers.


We were pulling the full auth chain for the room out of the DB each time we backfilled, which can be *huge* for large rooms and is totally unnecessary.


When receiving a /send_join request for a room with join rules set to 'restricted', check if the user is a member of the spaces defined in the 'allow' key of the join rules.

This only applies to an experimental room version, as defined in MSC3083.
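The check reduces to "is the user joined to any room listed in `allow`?". The sketch assumes `allow` entries shaped like the later stable spec's `{"type": "m.room_membership", "room_id": ...}`; the experimental room version this commit targets may have used a different shape. It also assumes a hypothetical precomputed map of room ID to joined members.

```python
from typing import Any, Dict, Set


def satisfies_allow_rules(
    join_rules: Dict[str, Any], user_id: str, joined_members: Dict[str, Set[str]]
) -> bool:
    """True if `user_id` is joined to any room/space listed in `allow`."""
    if join_rules.get("join_rule") != "restricted":
        return False  # the allow list only applies to restricted rooms
    for rule in join_rules.get("allow", []):
        if rule.get("type") != "m.room_membership":
            continue  # ignore rule types we don't understand
        if user_id in joined_members.get(rule.get("room_id", ""), set()):
            return True
    return False


rules = {
    "join_rule": "restricted",
    "allow": [{"type": "m.room_membership", "room_id": "!space:example.org"}],
}
members = {"!space:example.org": {"@alice:example.org"}}
print(satisfies_allow_rules(rules, "@alice:example.org", members))  # True
print(satisfies_allow_rules(rules, "@bob:example.org", members))  # False
```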


handler (#9800)

This refactoring allows adding logic that uses the event context before persisting it.


room. (#9763)"

This reverts commit cc51aaaa7adb0ec2235e027b5184ebda9b660ec4.

The PR was prematurely merged and not yet approved.


When receiving a /send_join request for a room with join rules set to 'restricted', check if the user is a member of the spaces defined in the 'allow' key of the join rules.

This only applies to an experimental room version, as defined in MSC3083.


Part of #9744

Removes all redundant `# -*- coding: utf-8 -*-` lines from files, as python 3 automatically reads source code as utf-8 now.

`Signed-off-by: Jonathan de Jong <jonathan@automatia.nl>`


Part of #9366

Adds in fixes for B006 and B008, both relating to mutable parameter lint errors.

Signed-off-by: Jonathan de Jong <jonathan@automatia.nl>
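For reference, flake8-bugbear's B006 flags mutable default arguments and B008 flags function calls in argument defaults. Both are evaluated once, when the function is defined. A minimal illustration of the B006 failure and the usual `None`-sentinel fix, with hypothetical function names:

```python
from typing import List, Optional


def add_item_buggy(item: str, items: List[str] = []) -> List[str]:  # noqa: B006
    # The default list is created once, at definition time, and then shared
    # by every call that omits `items`.
    items.append(item)
    return items


def add_item_fixed(item: str, items: Optional[List[str]] = None) -> List[str]:
    # A fresh list per call when the caller doesn't supply one.
    if items is None:
        items = []
    items.append(item)
    return items


add_item_buggy("a")
print(add_item_buggy("b"))  # ['a', 'b']
add_item_fixed("a")
print(add_item_fixed("b"))  # ['b']
```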


This should fix a class of bug where we forget to check if e.g. the appservice shouldn't be ratelimited.

We also check the `ratelimit_override` table to check if the user has ratelimiting disabled. That table is really only meant to override the event sender ratelimiting, so we don't use any values from it (as they might not make sense for different rate limits), but we do infer that if ratelimiting is disabled for the user we should disable all ratelimits.

Fixes #9663
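The inference described above can be sketched as follows. Two things are hypothetical: the `RatelimitOverride` record, and the assumption that an override with a zero message rate means "disabled". Both are illustrative assumptions, not a statement of Synapse's schema. As in the commit message, the override's actual rates are not reused for other limits.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class RatelimitOverride:
    # Hypothetical row from a per-user override table.
    messages_per_second: float
    burst_count: int


def ratelimiting_enabled(
    user_id: str,
    exempt_appservice: bool,
    overrides: Dict[str, RatelimitOverride],
) -> bool:
    if exempt_appservice:
        return False
    override: Optional[RatelimitOverride] = overrides.get(user_id)
    # Only infer "disabled" from the override; its rates are not reused,
    # since they were chosen for event sending, not other limits.
    if override is not None and override.messages_per_second == 0:
        return False
    return True


overrides = {"@bot:example.org": RatelimitOverride(0, 0)}
print(ratelimiting_enabled("@bot:example.org", False, overrides))  # False
print(ratelimiting_enabled("@alice:example.org", False, overrides))  # True
```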


Background: When we receive incoming federation traffic, and notice that we are missing prev_events from the incoming traffic, first we do a `/get_missing_events` request, and then if we still have missing prev_events, we set up new backwards-extremities. To do that, we need to make a `/state_ids` request to ask the remote server for the state at those prev_events, and then we may need to ask the remote server for any events in that state which we don't already have, as well as the auth events for those missing state events, so that we can auth them.

This PR attempts to optimise the processing of that state request. The `state_ids` API returns a list of the state