| Commit message | Author | Age | Files | Lines |

|---|

| |

|

|

|

|

|

|

| |

Fix race conditions in the async cache invalidation logic, by separating

the async & local invalidation calls and ensuring any async call is

executed first.
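The ordering described above can be sketched as follows. This is a minimal illustration only, not Synapse's implementation: `ExternalCache`, `TwoLayerCache` and their methods are hypothetical names, and the external cache stands in for any async-backed store.

```python
import asyncio
from typing import Dict, List


class ExternalCache:
    """Hypothetical async cache (for example, one backed by a remote store)."""

    def __init__(self, log: List[str]) -> None:
        self._data: Dict[str, str] = {}
        self._log = log

    async def invalidate(self, key: str) -> None:
        await asyncio.sleep(0)  # yield to the event loop, as real I/O would
        self._data.pop(key, None)
        self._log.append(f"async:{key}")


class TwoLayerCache:
    """Hypothetical wrapper that keeps the async and local calls separate."""

    def __init__(self) -> None:
        self.log: List[str] = []
        self.local: Dict[str, str] = {}
        self.external = ExternalCache(self.log)

    def invalidate_local(self, key: str) -> None:
        self.local.pop(key, None)
        self.log.append(f"local:{key}")

    async def invalidate(self, key: str) -> None:
        # The async call is awaited to completion first, then the local one runs.
        await self.external.invalidate(key)
        self.invalidate_local(key)


def demo() -> List[str]:
    cache = TwoLayerCache()
    cache.local["event"] = "value"
    asyncio.run(cache.invalidate("event"))
    return cache.log
```

Calling `demo()` returns the order in which the two invalidations ran, which makes the "async first" ordering easy to assert in a test.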

Signed off by Nick @ Beeper (@Fizzadar).

|

| |

|

|

|

| |

Some experimental prep work to enable external event caching based on #9379 & #12955. Doesn't actually move the cache at all, just lays the groundwork for async implemented caches.

Signed off by Nick @ Beeper (@Fizzadar)

|

| | |

|

| |

|

|

|

|

|

|

|

|

|

| |

Bounce recalculation of current state to the correct event persister and

move recalculation of current state into the event persistence queue, to

avoid concurrent updates to a room's current state.

Also give recalculation of a room's current state a real stream

ordering.

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

Whenever we want to persist an event, we first compute an event context,

which includes the state at the event and a flag indicating whether the

state is partial. After a lot of processing, we finally try to store the

event in the database, which can fail for partial state events when the

containing room has been un-partial stated in the meantime.

We detect the race as a foreign key constraint failure in the data store

layer and turn it into a special `PartialStateConflictError` exception,

which makes its way up to the method in which we computed the event

context.

To make things difficult, the exception needs to cross a replication

request: `/fed_send_events` for events coming over federation and

`/send_event` for events from clients. We transport the

`PartialStateConflictError` as a `409 Conflict` over replication and

turn `409`s back into `PartialStateConflictError`s on the worker making

the request.

All client events go through

`EventCreationHandler.handle_new_client_event`, which is called in

*a lot* of places. Instead of trying to update all the code which

creates client events, we turn the `PartialStateConflictError` into a

`429 Too Many Requests` in

`EventCreationHandler.handle_new_client_event` and hope that clients

take it as a hint to retry their request.

On the federation event side, there are 7 places which compute event

contexts. 4 of them use outlier event contexts:

`FederationEventHandler._auth_and_persist_outliers_inner`,

`FederationHandler.do_knock`, `FederationHandler.on_invite_request` and

`FederationHandler.do_remotely_reject_invite`. These events won't have

the partial state flag, so we do not need to do anything for them.

The remaining 3 paths which create events are

`FederationEventHandler.process_remote_join`,

`FederationEventHandler.on_send_membership_event` and

`FederationEventHandler._process_received_pdu`.

We can't experience the race in `process_remote_join`, unless we're

handling an additional join into a partial state room, which currently

blocks, so we make no attempt to handle it correctly.

`on_send_membership_event` is only called by

`FederationServer._on_send_membership_event`, so we catch the

`PartialStateConflictError` there and retry just once.

`_process_received_pdu` is called by `on_receive_pdu` for incoming

events and `_process_pulled_event` for backfill. The latter should never

try to persist partial state events, so we ignore it. We catch the

`PartialStateConflictError` in `on_receive_pdu` and retry just once.

Referring to the graph of code paths in

https://github.com/matrix-org/synapse/issues/12988#issuecomment-1156857648

may make the above make more sense.
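The "catch and retry just once" pattern used in `on_receive_pdu` and `_on_send_membership_event` can be sketched roughly as below. This is an assumption-laden illustration: the exception class is redefined locally so the example runs on its own, `persist_with_one_retry` is a hypothetical helper, and the code is synchronous for brevity where the real handlers are async.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


class PartialStateConflictError(Exception):
    """Local stand-in for the exception named above, so the sketch is runnable."""


def persist_with_one_retry(compute_and_persist: Callable[[], T]) -> T:
    """Call `compute_and_persist`; on a partial state conflict, retry just once.

    The callable is expected to recompute the event context on every call, so
    the second attempt sees the room's new (un-partial) state.
    """
    try:
        return compute_and_persist()
    except PartialStateConflictError:
        # Second and final attempt; a second conflict propagates to the caller.
        return compute_and_persist()


attempts: List[int] = []


def flaky() -> str:
    """Hypothetical persist step that hits the race on its first call only."""
    attempts.append(1)
    if len(attempts) == 1:
        raise PartialStateConflictError()
    return "persisted"


result = persist_with_one_retry(flaky)
```

A single retry fits the race described above, since a room is un-partial stated once; if a second conflict does occur, the helper lets it propagate rather than looping.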

Signed-off-by: Sean Quah <seanq@matrix.org>

|

| |

* Remove redundant references to `event_edges.room_id`

We don't need to care about the room_id here, because we are already checking

the event id.

* Clean up the event_edges table

We make a number of changes to `event_edges`:

* We give the `room_id` and `is_state` columns defaults (null and false

respectively) so that we can stop populating them.

* We drop any rows that have `is_state` set true - they should no longer

exist.

* We drop any rows that do not exist in `events` - these should not exist

either.

* We drop the old unique constraint on all the columns, which wasn't much use.

* We create a new unique index on `(event_id, prev_event_id)`.

* We add a foreign key constraint to `events`.

These happen rather differently depending on whether we are on Postgres or

SQLite. For SQLite, we just rebuild the whole table, copying only the rows we

want to keep. For Postgres, we try to do things in the background as much as

possible.

* Stop populating `event_edges.room_id` and `is_state`

We can just rely on the defaults.
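The SQLite path described above (rebuild the whole table, copying only the rows we want to keep) might look roughly like the following. The column types, the index name and the `rebuild_event_edges` helper are assumptions made for illustration; this is not the actual schema delta.

```python
import sqlite3
from typing import List, Tuple


def rebuild_event_edges(conn: sqlite3.Connection) -> None:
    """Rebuild `event_edges`, keeping only non-state rows that exist in `events`."""
    conn.executescript(
        """
        CREATE TABLE new_event_edges (
            event_id TEXT NOT NULL,
            prev_event_id TEXT NOT NULL,
            room_id TEXT NULL,                    -- default: null
            is_state BOOLEAN NOT NULL DEFAULT 0,  -- default: false
            FOREIGN KEY (event_id) REFERENCES events (event_id)
        );
        -- DISTINCT is an assumption of this sketch, so that the unique
        -- index below can always be created.
        INSERT INTO new_event_edges (event_id, prev_event_id)
            SELECT DISTINCT ee.event_id, ee.prev_event_id
            FROM event_edges AS ee
            JOIN events AS e ON e.event_id = ee.event_id
            WHERE ee.is_state = 0;
        DROP TABLE event_edges;
        ALTER TABLE new_event_edges RENAME TO event_edges;
        CREATE UNIQUE INDEX event_edges_event_id_prev_event_id_idx
            ON event_edges (event_id, prev_event_id);
        """
    )


def demo() -> List[Tuple]:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE events (event_id TEXT PRIMARY KEY);
        CREATE TABLE event_edges (
            event_id TEXT NOT NULL,
            prev_event_id TEXT NOT NULL,
            room_id TEXT NOT NULL,
            is_state BOOLEAN NOT NULL
        );
        INSERT INTO events VALUES ('$a'), ('$b');
        INSERT INTO event_edges VALUES
            ('$b', '$a', '!room', 0),     -- kept
            ('$b', '$a', '!room', 1),     -- dropped: is_state is true
            ('$gone', '$a', '!room', 0);  -- dropped: not in events
        """
    )
    rebuild_event_edges(conn)
    return conn.execute(
        "SELECT event_id, prev_event_id, room_id, is_state FROM event_edges"
    ).fetchall()
```

Only the first sample row survives the rebuild, and it picks up the new defaults for `room_id` and `is_state` because the copy no longer populates them.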

|

| | |

|

| |

|

|

|

|

|

|

|

|

| |

Implements the following behind an experimental configuration flag:

* A new push rule kind for mutually related events.

* A new default push rule (`.m.rule.thread_reply`) under an unstable prefix.

This is missing part of MSC3772:

* The `.m.rule.thread_reply_to_me` push rule, this depends on MSC3664 / #11804.

|

| | |

|

| |

|

|

|

|

|

|

|

|

|

|

|

| |

Parse the `m.relates_to` event content field (which describes relations)

in a single place. This is used during:

* Event persistence.

* Validation of the Client-Server API.

* Fetching bundled aggregations.

* Processing of push rules.

Each of these separately implemented the logic and each made slightly

different assumptions about what was valid. Some had minor / potential

bugs.
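As a rough sketch of what parsing `m.relates_to` in a single place could look like, assuming a hypothetical `Relation` dataclass and `parse_relation` helper (neither name is taken from this commit):

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class Relation:
    """Hypothetical parsed form of an `m.relates_to` field."""

    rel_type: str
    parent_id: str
    aggregation_key: Optional[str] = None


def parse_relation(content: Dict[str, Any]) -> Optional[Relation]:
    """Return the relation described by `content`, or None if absent or malformed."""
    relates_to = content.get("m.relates_to")
    if not isinstance(relates_to, dict):
        return None

    rel_type = relates_to.get("rel_type")
    parent_id = relates_to.get("event_id")
    if not isinstance(rel_type, str) or not isinstance(parent_id, str):
        return None

    # `key` is only meaningful for some relation types; ignore non-strings.
    key = relates_to.get("key")
    return Relation(rel_type, parent_id, key if isinstance(key, str) else None)
```

With one parser, every caller makes the same decision about what counts as a valid relation, instead of each code path applying its own checks.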

|

| |

|

|

|

|

|

|

|

|

| |

Refactor how the `EventContext` class works, with the intention of reducing the amount of state we fetch from the DB during event processing.

The idea here is to get rid of the cached `current_state_ids` and `prev_state_ids` that live in the `EventContext`, and instead defer straight to the database (and its caching).

One change that may have a noticeable effect is that we now no longer prefill the `get_current_state_ids` cache on a state change. However, that query is relatively light, since it's just a case of reading a table from the DB (unlike fetching state at an event which is more heavyweight). For deployments with workers this cache isn't even used.

Part of #12684

|

| | |

|

| |

|

|

|

| |

This table is never read, since #11794. We stop writing to it; in future we can

drop it altogether.

|

| | |

|

| |

|

|

|

| |

This works by taking a row level lock on the `rooms` table at the start of both transactions, ensuring that they don't run at the same time. In the event persistence transaction we also check that there is an entry still in the `rooms` table.

I can't figure out how to do this in SQLite. I was just going to lock the table, but it seems that we don't support that in SQLite either, so I'm *really* confused as to how we maintain integrity in SQLite when using `lock_table`....

|

| |

|

|

|

| |

We work through all the events with partial state, updating the state at each

of them. Once it's done, we recalculate the state for the whole room, and then

mark the room as having complete state.

|

| |

|

|

|

| |

Removes references to unstable thread relation, unstable

identifiers for filtering parameters, and the experimental

config flag.

|

| |

|

|

| |

Fixes a bug introduced in #11417 where we would only include backfilled events

in `synapse_event_persisted_position`

|

| |

|

|

|

|

|

| |

Principally, `prometheus_client.REGISTRY.register` now requires its argument to

extend `prometheus_client.Collector`.

Additionally, `Gauge.set` is now annotated so that passing `Optional[int]`

causes an error.

|

| |

|

|

|

| |

This should speed up push rule calculations for rooms with large numbers of local users when the main push rule cache fails.

Co-authored-by: reivilibre <oliverw@matrix.org>

|

| |

|

|

| |

The unstable identifiers are still supported if the experimental configuration

flag is enabled. The unstable identifiers will be removed in a future release.

|

| |

|

|

|

|

|

|

|

| |

This is allowed per MSC2675, although the original implementation did

not allow for it and would return an empty chunk / not bundle aggregations.

The main thing to improve is that the various caches get cleared properly

when an event is redacted, and that edits must not leak if the original

event is redacted (as that would presumably leak something similar to

the original event content).

|

| |

|

|

|

| |

The caches for the target of the relation must be cleared

so that the bundled aggregations are re-calculated after

the redaction is processed.

|

| |

|

|

|

|

|

|

|

|

|

|

| |

When we get a partial_state response from send_join, store information in the

database about it:

* store a record about the room as a whole having partial state, and stash the

list of member servers too.

* flag the join event itself as having partial state

* also, for any new events whose prev-events are partial-stated, note that

they will *also* be partial-stated.

We don't yet make any attempt to interpret this data, so API calls (and a bunch

of other things) are just going to get incorrect data.

|

| |

|

| |

Signed-off-by: Sean Quah <seanq@element.io>

|

| |

|

|

|

|

|

| |

Don't attempt to add non-string `value`s to `event_search` and add a

background update to clear out bad rows from `event_search` when

using sqlite.

Signed-off-by: Sean Quah <seanq@element.io>

|

| |

|

|

|

|

|

| |

When the server leaves a room the `get_rooms_for_user` cache is not

correctly invalidated for the remote users in the room. This means that

subsequent calls to `get_rooms_for_user` for the remote users would

incorrectly include the room (it shouldn't be included because the

server no longer knows anything about the room).

|

| |

|

|

|

| |

This should reduce database usage when fetching bundled aggregations

as the number of individual queries (and round trips to the database) is

reduced.

|

| |

|

|

|

| |

This should reduce database usage when fetching bundled aggregations

as the number of individual queries (and round trips to the database) is

reduced.

|

| |

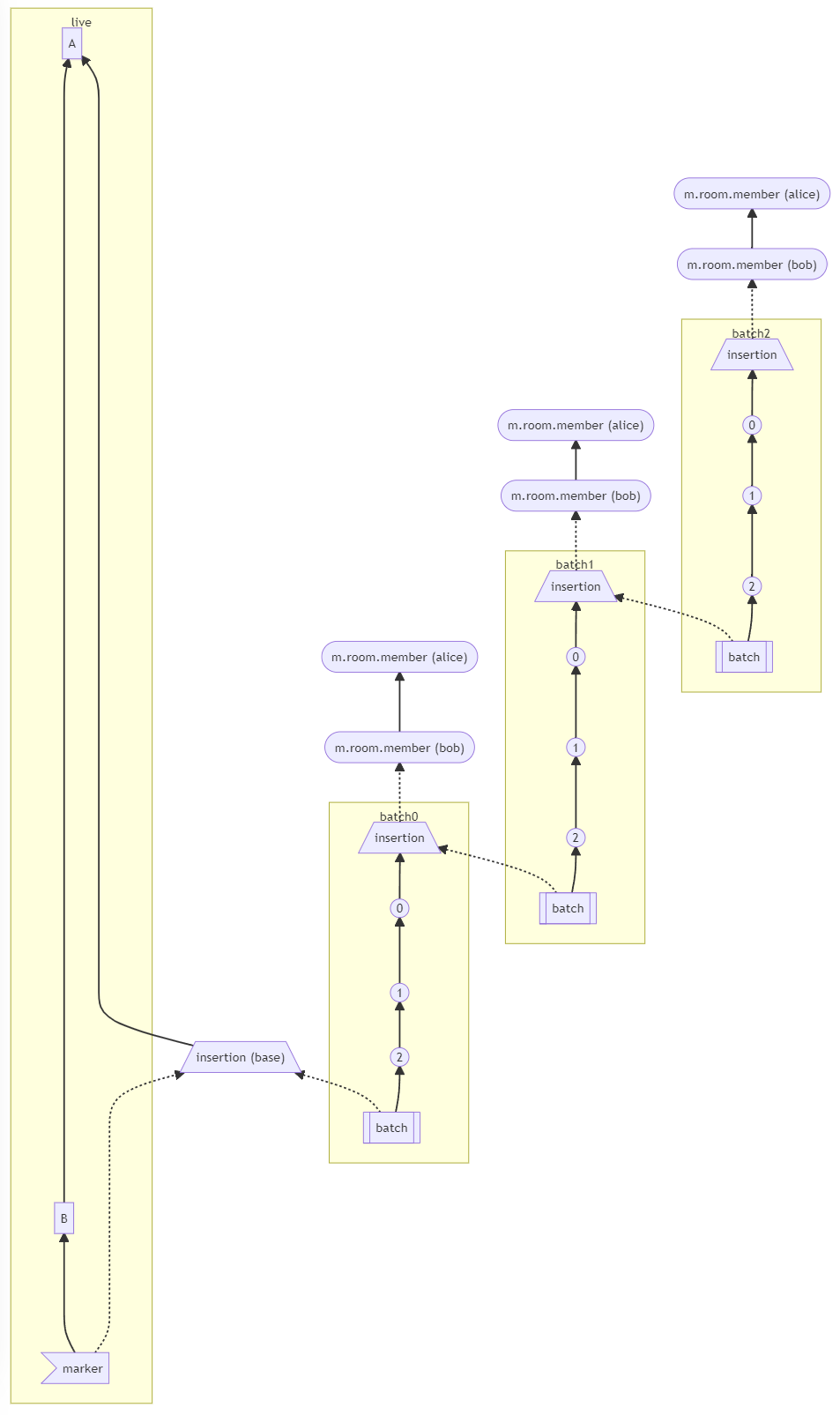

(MSC2716) (#11114)

Fix https://github.com/matrix-org/synapse/issues/11091

Fix https://github.com/matrix-org/synapse/issues/10764 (side-stepping the issue because we no longer have to deal with `fake_prev_event_id`)

1. Made the `/backfill` response return messages in `(depth, stream_ordering)` order (previously only sorted by `depth`)

- Technically, it shouldn't really matter how `/backfill` returns things but I'm just trying to make the `stream_ordering` a little more consistent from the origin to the remote homeservers in order to get the order of messages from `/messages` consistent ([sorted by `(topological_ordering, stream_ordering)`](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)).

- Even now that we return backfilled messages in order, it still doesn't guarantee the same `stream_ordering` (and more importantly the [`/messages` order](https://github.com/matrix-org/synapse/blob/develop/docs/development/room-dag-concepts.md#depth-and-stream-ordering)) on the other server. For example, if a room has a bunch of history imported and someone visits a permalink to a historical message back in time, their homeserver will skip over the historical messages in between and insert the permalink as the next message in the `stream_order` and totally throw off the sort.

- This will be even more the case when we add the [MSC3030 jump to date API endpoint](https://github.com/matrix-org/matrix-doc/pull/3030) so the static archives can navigate and jump to a certain date.

- We're solving this in the future by switching to [online topological ordering](https://github.com/matrix-org/gomatrixserverlib/issues/187) and [chunking](https://github.com/matrix-org/synapse/issues/3785) which by its nature will apply retroactively to fix any inconsistencies introduced by people permalinking

2. As we're navigating `prev_events` to return in `/backfill`, we order by `depth` first (newest -> oldest) and now also tie-break based on the `stream_ordering` (newest -> oldest). This is technically important because MSC2716 inserts a bunch of historical messages at the same `depth` so it's best to be prescriptive about which ones we should process first. In reality, I think the code already looped over the historical messages as expected because the database is already in order.

3. Making the historical state chain and historical event chain float on their own by having no `prev_events` instead of a fake `prev_event` which caused backfill to get clogged with an unresolvable event. Fixes https://github.com/matrix-org/synapse/issues/11091 and https://github.com/matrix-org/synapse/issues/10764

4. We no longer find connected insertion events by finding a potential `prev_event` connection to the current event we're iterating over. We now solely rely on marker events which when processed, add the insertion event as an extremity and the federating homeserver can ask about it when time calls.

- Related discussion, https://github.com/matrix-org/synapse/pull/11114#discussion_r741514793
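The `(depth, stream_ordering)` ordering from points 1 and 2 can be illustrated with a plain tuple sort key. The `Event` tuple, the `newest_first` helper and the sample values below are made up for the example, and the negative `stream_ordering` values for historical events are an assumption of the sketch.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    event_id: str
    depth: int
    stream_ordering: int


def newest_first(events: List[Event]) -> List[Event]:
    """Sort by depth (newest -> oldest), tie-breaking on stream_ordering (newest -> oldest)."""
    return sorted(events, key=lambda e: (-e.depth, -e.stream_ordering))


events = [
    Event("$hist1", depth=10, stream_ordering=-3),
    Event("$live", depth=11, stream_ordering=7),
    Event("$hist2", depth=10, stream_ordering=-2),
]
ordered_ids = [e.event_id for e in newest_first(events)]
```

The two historical events share `depth=10`, so the `stream_ordering` tie-break alone decides which of them comes first.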

Before | After

--- | ---

|

#### Why aren't we sorting topologically when receiving backfill events?

> The main reason we're going to opt to not sort topologically when receiving backfill events is because it's probably best to do whatever is easiest to make it just work. People will probably have opinions once they look at [MSC2716](https://github.com/matrix-org/matrix-doc/pull/2716) which could change whatever implementation anyway.

>

> As mentioned, ideally we would do this but code necessary to make the fake edges but it gets confusing and gives an impression of “just whyyyy” (feels icky). This problem also dissolves with online topological ordering.

>

> -- https://github.com/matrix-org/synapse/pull/11114#discussion_r741517138

See https://github.com/matrix-org/synapse/pull/11114#discussion_r739610091 for the technical difficulties

|

| |

|

|

| |

... and start populating them for new events

|

| |

|

|

| |

A couple of surprises for me here, so thought I'd document them

|

| |

|

|

|

|

| |

Per updates to MSC3440.

This is implemented as a separate method since it needs to be cached

on a per-user basis, instead of a per-thread basis.

|

| |

|

|

|

|

|

| |

I've never found this terribly useful. I think it was added in the early days

of Synapse, without much thought as to what would actually be useful to log,

and has just been cargo-culted ever since.

Rather, it tends to clutter up debug logs with useless information.

|

| |

|

|

| |

This should be (slightly) more efficient and it is simpler

to have a single method for inserting multiple values.

|

| | |

|

| |

|

| |

To improve type hints throughout the code.

|

| | |

|

| |

|

|

|

|

| |

Create a new dict helper method `simple_insert_many_values_txn`, which takes

raw row values, rather than {key=>value} dicts. This saves us a bunch of dict

munging, and makes it easier to use generators rather than creating

intermediate lists and dicts.
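A minimal sketch of the idea, using the standard-library `sqlite3` module. The `insert_many_values` helper below is hypothetical and is not the signature of `simple_insert_many_values_txn`; it only shows how raw row values let a generator feed the insert directly.

```python
import sqlite3
from typing import Any, Iterable, Sequence


def insert_many_values(
    txn: sqlite3.Cursor,
    table: str,
    keys: Sequence[str],
    values: Iterable[Sequence[Any]],
) -> None:
    """Insert rows given as raw value sequences, each in the same order as `keys`."""
    sql = "INSERT INTO %s (%s) VALUES (%s)" % (
        table,
        ", ".join(keys),
        ", ".join("?" for _ in keys),
    )
    # `executemany` consumes any iterable of rows, so no intermediate list is needed.
    txn.executemany(sql, values)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE event_edges (event_id TEXT, prev_event_id TEXT)")

prev_ids = ["$a", "$b"]
insert_many_values(
    conn.cursor(),
    "event_edges",
    ("event_id", "prev_event_id"),
    (("$c", prev) for prev in prev_ids),  # generator of raw row tuples
)
rows = conn.execute(
    "SELECT event_id, prev_event_id FROM event_edges ORDER BY prev_event_id"
).fetchall()
```

Compared with a list of `{key: value}` dicts, the column names are stated once and each row is just a tuple.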

|

| |

|

| |

this field is never read, so we may as well stop populating it.

|

| | |

|

| |

|

|

|

| |

We're going to add a `state_key` column to the `events` table, so we need to

add some disambiguation to queries which use it.

|

| |

(`_persist_events_and_state_updates`) (#11417)

Part of https://github.com/matrix-org/synapse/issues/11300

Call stack:

- `_persist_events_and_state_updates` (added `use_negative_stream_ordering`)

- `_persist_events_txn`

- `_update_room_depths_txn` (added `update_room_forward_stream_ordering`)

- `_update_metadata_tables_txn`

- `_store_room_members_txn` (added `inhibit_local_membership_updates`)

Uses keyword-only arguments (`*`) to reduce mistakes from `backfilled` being left as a positional argument somewhere and being misinterpreted as one of our new arguments.
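To illustrate why the bare `*` helps, here is a small sketch. `persist_events` is a hypothetical stand-in whose body is irrelevant; only the signature matters.

```python
from typing import List


def persist_events(
    events: List[str],
    *,
    use_negative_stream_ordering: bool = False,
    inhibit_local_membership_updates: bool = False,
) -> str:
    """Hypothetical stand-in; everything after `*` must be passed by keyword."""
    direction = "backwards" if use_negative_stream_ordering else "forwards"
    return f"{len(events)} event(s), stream ordering {direction}"


ok = persist_events(["$a"], use_negative_stream_ordering=True)

try:
    # A leftover positional `backfilled`-style argument is rejected outright
    # instead of silently binding to one of the new flags.
    persist_events(["$a"], True)  # type: ignore[misc]
    rejected = False
except TypeError:
    rejected = True
```

A stale call site therefore fails loudly with a `TypeError` rather than changing behaviour quietly.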

|

| |

|

|

| |

Also refactor the stream ID trackers/generators a bit and try to

document them better.

|

| |

|

|

|

| |

Instead of only known relation types. This also reworks the background

update for thread relations to crawl events and search for any relation

type, not just threaded relations.

|

| |

storing in DB (#11230)

* change display names/avatar URLS to None if they contain null bytes

* add changelog

* add POC test, requested changes

* add a saner test and remove old one

* update test to verify that display name has been changed to None

* make test less fragile
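The first item above (changing display names and avatar URLs to None if they contain null bytes) amounts to a check along these lines. `none_if_null_bytes` is a hypothetical helper name used only for this sketch.

```python
from typing import Optional


def none_if_null_bytes(value: Optional[str]) -> Optional[str]:
    """Return None when `value` contains a null byte, otherwise return it unchanged."""
    if value is not None and "\x00" in value:
        return None
    return value


display_name = none_if_null_bytes("Al\x00ice")
avatar_url = none_if_null_bytes("mxc://example.org/abc")
```

In this sketch a value containing a null byte is replaced with None as a whole, rather than having the byte stripped out.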

|

| | |

|

| |

|

|

| |

Adds experimental support for MSC3440's `io.element.thread` relation

type (and the aggregation for it).

|

| |

(MSC2716) (#10975)

Resolve and share `state_groups` for all historical events in batch. This also helps for showing the appropriate avatar/displayname in Element and will work whenever `/messages` has one of the historical messages as the first message in the batch.

This does have the flaw where if you just insert a single historical event somewhere, it probably won't resolve the state correctly from `/messages` or `/context` since it will grab a non historical event above or below with resolved state which never included the historical state back then. For the same reasons, this also does not work in Element between the transition from actual messages to historical messages. In the Gitter case, this isn't really a problem since all of the historical messages are in one big lump at the beginning of the room.

For a future iteration, might be good to look at `/messages` and `/context` to additionally add the `state` for any historical messages in that batch.

---

How are the `state_groups` shared? To illustrate the `state_group` sharing, see this example:

**Before** (new `state_group` for every event 😬, very inefficient):

```

# Tests from https://github.com/matrix-org/complement/pull/206

$ COMPLEMENT_ALWAYS_PRINT_SERVER_LOGS=1 COMPLEMENT_DIR=../complement ./scripts-dev/complement.sh TestBackfillingHistory/parallel/should_resolve_member_state_events_for_historical_events

create_new_client_event m.room.member event=$_JXfwUDIWS6xKGG4SmZXjSFrizhARM7QblhATVWWUcA state_group=None

create_new_client_event org.matrix.msc2716.insertion event=$1ZBfmBKEjg94d-vGYymKrVYeghwBOuGJ3wubU1-I9y0 state_group=9

create_new_client_event org.matrix.msc2716.insertion event=$Mq2JvRetTyclPuozRI682SAjYp3GqRuPc8_cH5-ezPY state_group=10

create_new_client_event m.room.message event=$MfmY4rBQkxrIp8jVwVMTJ4PKnxSigpG9E2cn7S0AtTo state_group=11

create_new_client_event m.room.message event=$uYOv6V8wiF7xHwOMt-60d1AoOIbqLgrDLz6ZIQDdWUI state_group=12

create_new_client_event m.room.message event=$PAbkJRMxb0bX4A6av463faiAhxkE3FEObM1xB4D0UG4 state_group=13

create_new_client_event org.matrix.msc2716.batch event=$Oy_S7AWN7rJQe_MYwGPEy6RtbYklrI-tAhmfiLrCaKI state_group=14

```

**After** (all events in batch sharing `state_group=10`) (the base insertion event has `state_group=8` which matches the `prev_event` we're inserting next to):

```

# Tests from https://github.com/matrix-org/complement/pull/206

$ COMPLEMENT_ALWAYS_PRINT_SERVER_LOGS=1 COMPLEMENT_DIR=../complement ./scripts-dev/complement.sh TestBackfillingHistory/parallel/should_resolve_member_state_events_for_historical_events

create_new_client_event m.room.member event=$PWomJ8PwENYEYuVNoG30gqtybuQQSZ55eldBUSs0i0U state_group=None

create_new_client_event org.matrix.msc2716.insertion event=$e_mCU7Eah9ABF6nQU7lu4E1RxIWccNF05AKaTT5m3lw state_group=9

create_new_client_event org.matrix.msc2716.insertion event=$ui7A3_GdXIcJq0C8GpyrF8X7B3DTjMd_WGCjogax7xU state_group=10

create_new_client_event m.room.message event=$EnTIM5rEGVezQJiYl62uFBl6kJ7B-sMxWqe2D_4FX1I state_group=10

create_new_client_event m.room.message event=$LGx5jGONnBPuNhAuZqHeEoXChd9ryVkuTZatGisOPjk state_group=10

create_new_client_event m.room.message event=$wW0zwoN50lbLu1KoKbybVMxLbKUj7GV_olozIc5i3M0 state_group=10

create_new_client_event org.matrix.msc2716.batch event=$5ZB6dtzqFBCEuMRgpkU201Qhx3WtXZGTz_YgldL6JrQ state_group=10

```

|

| |

versions (#10962)

We correctly allowed using the MSC2716 batch endpoint for

the room creator in existing room versions but accidentally didn't track

the events because of a logic flaw.

This prevented you from connecting subsequent chunks together because it would

throw the unknown batch ID error.

We only want to process MSC2716 events when:

- The room version supports MSC2716

- Any room where the homeserver has the `msc2716_enabled` experimental feature enabled and the event is from the room creator
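The two conditions above reduce to a single boolean check. The function and parameter names in this sketch are hypothetical (only `msc2716_enabled` comes from the text above), and how each input is looked up is left out.

```python
def should_process_msc2716_event(
    room_version_supports_msc2716: bool,
    msc2716_enabled: bool,
    sender: str,
    room_creator: str,
) -> bool:
    """Process when the room version supports MSC2716, or when the experimental
    feature is enabled and the event was sent by the room creator."""
    return room_version_supports_msc2716 or (
        msc2716_enabled and sender == room_creator
    )
```

Keeping both branches in one expression makes it harder for the "existing room version, sent by the creator" case to be allowed at the endpoint but skipped when tracking the events.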

|

| | |

|

| |

|

|

|

| |

Before Synapse 1.31 (#9411), we relied on `outlier` being stored in the

`internal_metadata` column. We can now assume nobody will roll back their

deployment that far and drop the legacy support.

|

| |

|

|

|

|

|

|

| |

endpoint (#10838)

See https://github.com/matrix-org/matrix-doc/pull/2716#discussion_r684574497

Dropping support for older MSC2716 room versions so we don't have to worry about

supporting both chunk and batch events.

|

| | |

|

| |

|

|

|

| |

We've already batched up the events previously, and assume in other

places in the events.py file that we have. Removing this makes it easier

to adjust the batch sizes in one place.

|

| |

|

|

| |

Outlier events don't ever have push actions associated with them, so we

can skip some expensive queries during event persistence.

|

| |

(#10566)

* Allow room creator to send MSC2716 related events in existing room versions

Discussed at https://github.com/matrix-org/matrix-doc/pull/2716/#discussion_r682474869

Restoring `get_create_event_for_room_txn` from,

https://github.com/matrix-org/synapse/pull/10245/commits/44bb3f0cf5cb365ef9281554daceeecfb17cc94d

* Add changelog

* Stop people from trying to redact MSC2716 events in unsupported room versions

* Populate rooms.creator column for easy lookup

> From some [out of band discussion](https://matrix.to/#/!UytJQHLQYfvYWsGrGY:jki.re/$p2fKESoFst038x6pOOmsY0C49S2gLKMr0jhNMz_JJz0?via=jki.re&via=matrix.org), my plan is to use `rooms.creator`. But currently, we don't fill in `creator` for remote rooms when a user is invited to a room for example. So we need to add some code to fill in `creator` wherever we add to the `rooms` table. And also add a background update to fill in the rows missing `creator` (we can use the same logic that `get_create_event_for_room_txn` is doing by looking in the state events to get the `creator`).

>

> https://github.com/matrix-org/synapse/pull/10566#issuecomment-901616642

* Remove and switch away from get_create_event_for_room_txn

* Fix no create event being found because no state events persisted yet

* Fix and add tests for rooms creator bg update

* Populate rooms.creator field for easy lookup

Part of https://github.com/matrix-org/synapse/pull/10566

- Fill in creator whenever we insert into the rooms table

- Add background update to backfill any missing creator values

* Add changelog

* Fix usage

* Remove extra delta already included in #10697

* Don't worry about setting creator for invite

* Only iterate over rows missing the creator

See https://github.com/matrix-org/synapse/pull/10697#discussion_r695940898

* Use constant to fetch room creator field

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696803029

* More protection from other random types

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696806853

* Move new background update to end of list

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696814181

* Fix query casing

* Fix ambiguity iterating over cursor instead of list

Fix `psycopg2.ProgrammingError: no results to fetch` error

when tests run with Postgres.

```

SYNAPSE_POSTGRES=1 SYNAPSE_TEST_LOG_LEVEL=INFO python -m twisted.trial tests.storage.databases.main.test_room

```

---

We use `txn.fetchall` because it will return the results as a

list or an empty list when there are no results.

Docs:

> `cursor` objects are iterable, so, instead of calling explicitly fetchone() in a loop, the object itself can be used:

>

> https://www.psycopg.org/docs/cursor.html#cursor-iterable

And I'm guessing iterating over a raw cursor does something weird when there are no results.

---

Test CI failure: https://github.com/matrix-org/synapse/pull/10697/checks?check_run_id=3468916530

```

tests.test_visibility.FilterEventsForServerTestCase.test_large_room

===============================================================================

[FAIL]

Traceback (most recent call last):

File "/home/runner/work/synapse/synapse/tests/storage/databases/main/test_room.py", line 85, in test_background_populate_rooms_creator_column

self.get_success(

File "/home/runner/work/synapse/synapse/tests/unittest.py", line 500, in get_success

return self.successResultOf(d)

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/trial/_synctest.py", line 700, in successResultOf

self.fail(

twisted.trial.unittest.FailTest: Success result expected on <Deferred at 0x7f4022f3eb50 current result: None>, found failure result instead:

Traceback (most recent call last):

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 701, in errback

self._startRunCallbacks(fail)

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 764, in _startRunCallbacks

self._runCallbacks()

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 858, in _runCallbacks

current.result = callback( # type: ignore[misc]

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 1751, in gotResult

current_context.run(_inlineCallbacks, r, gen, status)

--- <exception caught here> ---

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 1657, in _inlineCallbacks

result = current_context.run(

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/failure.py", line 500, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/runner/work/synapse/synapse/synapse/storage/background_updates.py", line 224, in do_next_background_update

await self._do_background_update(desired_duration_ms)

File "/home/runner/work/synapse/synapse/synapse/storage/background_updates.py", line 261, in _do_background_update

items_updated = await update_handler(progress, batch_size)

File "/home/runner/work/synapse/synapse/synapse/storage/databases/main/room.py", line 1399, in _background_populate_rooms_creator_column

end = await self.db_pool.runInteraction(

File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 686, in runInteraction

result = await self.runWithConnection(

File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 791, in runWithConnection

return await make_deferred_yieldable(

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/internet/defer.py", line 858, in _runCallbacks

current.result = callback( # type: ignore[misc]

File "/home/runner/work/synapse/synapse/tests/server.py", line 425, in <lambda>

d.addCallback(lambda x: function(*args, **kwargs))

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/enterprise/adbapi.py", line 293, in _runWithConnection

compat.reraise(excValue, excTraceback)

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/deprecate.py", line 298, in deprecatedFunction

return function(*args, **kwargs)

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/python/compat.py", line 404, in reraise

raise exception.with_traceback(traceback)

File "/home/runner/work/synapse/synapse/.tox/py/lib/python3.9/site-packages/twisted/enterprise/adbapi.py", line 284, in _runWithConnection

result = func(conn, *args, **kw)

File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 786, in inner_func

return func(db_conn, *args, **kwargs)

File "/home/runner/work/synapse/synapse/synapse/storage/database.py", line 554, in new_transaction

r = func(cursor, *args, **kwargs)

File "/home/runner/work/synapse/synapse/synapse/storage/databases/main/room.py", line 1375, in _background_populate_rooms_creator_column_txn

for room_id, event_json in txn:

psycopg2.ProgrammingError: no results to fetch

```

* Move code not under the MSC2716 room version underneath an experimental config option

See https://github.com/matrix-org/synapse/pull/10566#issuecomment-906437909

* Add ordering to rooms creator background update

See https://github.com/matrix-org/synapse/pull/10697#discussion_r696815277

* Add comment to better document constant

See https://github.com/matrix-org/synapse/pull/10697#discussion_r699674458

* Use constant field

|

| |

|

|

| |

This will only happen when a server has multiple out of band membership

events in a single room.

|

| |

* Make historical messages available to federated servers

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

Follow-up to https://github.com/matrix-org/synapse/pull/9247

* Debug message not available on federation

* Add base starting insertion point when no chunk ID is provided

* Fix messages from multiple senders in historical chunk

Follow-up to https://github.com/matrix-org/synapse/pull/9247

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

---

Previously, Synapse would throw a 403,

`Cannot force another user to join.`,

because we were trying to use `?user_id` from a single virtual user

which did not match with messages from other users in the chunk.

* Remove debug lines

* Messing with selecting insertion event extremities

* Move db schema change to new version

* Add more better comments

* Make a fake requester with just what we need

See https://github.com/matrix-org/synapse/pull/10276#discussion_r660999080

* Store insertion events in table

* Make base insertion event float off on its own

See https://github.com/matrix-org/synapse/pull/10250#issuecomment-875711889

Conflicts:

synapse/rest/client/v1/room.py

* Validate that the app service can actually control the given user

See https://github.com/matrix-org/synapse/pull/10276#issuecomment-876316455

Conflicts:

synapse/rest/client/v1/room.py

* Add some better comments on what we're trying to check for

* Continue debugging

* Share validation logic

* Add inserted historical messages to /backfill response

* Remove debug sql queries

* Some marker event implementation trials

* Clean up PR

* Rename insertion_event_id to just event_id

* Add some better sql comments

* More accurate description

* Add changelog

* Make it clear what MSC the change is part of

* Add more detail on which insertion event came through

* Address review and improve sql queries

* Only use event_id as unique constraint

* Fix test case where insertion event is already in the normal DAG

* Remove debug changes

* Add support for MSC2716 marker events

* Process markers when we receive them over federation

* WIP: make hs2 backfill historical messages after marker event

* hs2 to better ask for insertion event extremity

But running into the `sqlite3.IntegrityError: NOT NULL constraint failed: event_to_state_groups.state_group`

error

* Add insertion_event_extremities table

* Switch to chunk events so we can auth via power_levels

Previously, we were using `content.chunk_id` to connect one

chunk to another. But these events can be from any `sender`

and we can't tell who should be able to send historical events.

We know we only want the application service to do it but these

events have the sender of a real historical message, not the

application service user ID as the sender. Other federated homeservers

also have no indicator which senders are an application service on

the originating homeserver.

So we want to auth all of the MSC2716 events via power_levels

and have them be sent by the application service with proper

power levels in the room.

* Switch to chunk events for federation

* Add unstable room version to support new historical PL

* Messy: Fix undefined state_group for federated historical events

```

2021-07-13 02:27:57,810 - synapse.handlers.federation - 1248 - ERROR - GET-4 - Failed to backfill from hs1 because NOT NULL constraint failed: event_to_state_groups.state_group

Traceback (most recent call last):

File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 1216, in try_backfill

await self.backfill(

File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 1035, in backfill

await self._auth_and_persist_event(dest, event, context, backfilled=True)

File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 2222, in _auth_and_persist_event

await self._run_push_actions_and_persist_event(event, context, backfilled)

File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 2244, in _run_push_actions_and_persist_event

await self.persist_events_and_notify(

File "/usr/local/lib/python3.8/site-packages/synapse/handlers/federation.py", line 3290, in persist_events_and_notify

events, max_stream_token = await self.storage.persistence.persist_events(

File "/usr/local/lib/python3.8/site-packages/synapse/logging/opentracing.py", line 774, in _trace_inner

return await func(*args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 320, in persist_events

ret_vals = await yieldable_gather_results(enqueue, partitioned.items())

File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 237, in handle_queue_loop

ret = await self._per_item_callback(

File "/usr/local/lib/python3.8/site-packages/synapse/storage/persist_events.py", line 577, in _persist_event_batch

await self.persist_events_store._persist_events_and_state_updates(

File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 176, in _persist_events_and_state_updates

await self.db_pool.runInteraction(

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 681, in runInteraction

result = await self.runWithConnection(

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 770, in runWithConnection

return await make_deferred_yieldable(

File "/usr/local/lib/python3.8/site-packages/twisted/python/threadpool.py", line 238, in inContext

result = inContext.theWork() # type: ignore[attr-defined]

File "/usr/local/lib/python3.8/site-packages/twisted/python/threadpool.py", line 254, in <lambda>

inContext.theWork = lambda: context.call( # type: ignore[attr-defined]

File "/usr/local/lib/python3.8/site-packages/twisted/python/context.py", line 118, in callWithContext

return self.currentContext().callWithContext(ctx, func, *args, **kw)

File "/usr/local/lib/python3.8/site-packages/twisted/python/context.py", line 83, in callWithContext

return func(*args, **kw)

File "/usr/local/lib/python3.8/site-packages/twisted/enterprise/adbapi.py", line 293, in _runWithConnection

compat.reraise(excValue, excTraceback)

File "/usr/local/lib/python3.8/site-packages/twisted/python/deprecate.py", line 298, in deprecatedFunction

return function(*args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/twisted/python/compat.py", line 403, in reraise

raise exception.with_traceback(traceback)

File "/usr/local/lib/python3.8/site-packages/twisted/enterprise/adbapi.py", line 284, in _runWithConnection

result = func(conn, *args, **kw)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 765, in inner_func

return func(db_conn, *args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 549, in new_transaction

r = func(cursor, *args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/synapse/logging/utils.py", line 69, in wrapped

return f(*args, **kwargs)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 385, in _persist_events_txn

self._store_event_state_mappings_txn(txn, events_and_contexts)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/databases/main/events.py", line 2065, in _store_event_state_mappings_txn

self.db_pool.simple_insert_many_txn(

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 923, in simple_insert_many_txn

txn.execute_batch(sql, vals)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 280, in execute_batch

self.executemany(sql, args)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 300, in executemany

self._do_execute(self.txn.executemany, sql, *args)

File "/usr/local/lib/python3.8/site-packages/synapse/storage/database.py", line 330, in _do_execute

return func(sql, *args)

sqlite3.IntegrityError: NOT NULL constraint failed: event_to_state_groups.state_group

```

* Revert "Messy: Fix undefined state_group for federated historical events"

This reverts commit 187ab28611546321e02770944c86f30ee2bc742a.

* Fix federated events being rejected for no state_groups

Add fix from https://github.com/matrix-org/synapse/pull/10439

until it merges.

* Adapting to experimental room version

* Some log cleanup

* Add better comments around extremity fetching code and why

* Rename to be more accurate to what the function returns

* Add changelog

* Ignore rejected events

* Use simplified upsert

* Add Erik's explanation of extra event checks

See https://github.com/matrix-org/synapse/pull/10498#discussion_r680880332

* Clarify that the depth is not directly correlated to the backwards extremity that we return

See https://github.com/matrix-org/synapse/pull/10498#discussion_r681725404

* lock only matters for sqlite

See https://github.com/matrix-org/synapse/pull/10498#discussion_r681728061

* Move new SQL changes to its own delta file

* Clean up upsert docstring

* Bump database schema version (62)

|

| |

|

| |

scrollback history (MSC2716) (#10245)

* Make historical messages available to federated servers

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

Follow-up to https://github.com/matrix-org/synapse/pull/9247

* Debug message not available on federation

* Add base starting insertion point when no chunk ID is provided

* Fix messages from multiple senders in historical chunk

Follow-up to https://github.com/matrix-org/synapse/pull/9247

Part of MSC2716: https://github.com/matrix-org/matrix-doc/pull/2716

---

Previously, Synapse would throw a 403,

`Cannot force another user to join.`,

because we were trying to use `?user_id` from a single virtual user

which did not match with messages from other users in the chunk.

* Remove debug lines

* Messing with selecting insertion event extremities

* Move db schema change to new version

* Add better comments

* Make a fake requester with just what we need

See https://github.com/matrix-org/synapse/pull/10276#discussion_r660999080

* Store insertion events in table

* Make base insertion event float off on its own

See https://github.com/matrix-org/synapse/pull/10250#issuecomment-875711889

Conflicts:

synapse/rest/client/v1/room.py

* Validate that the app service can actually control the given user

See https://github.com/matrix-org/synapse/pull/10276#issuecomment-876316455

Conflicts:

synapse/rest/client/v1/room.py

* Add some better comments on what we're trying to check for

* Continue debugging

* Share validation logic

* Add inserted historical messages to /backfill response

* Remove debug sql queries

* Some marker event implementation trials

* Clean up PR

* Rename insertion_event_id to just event_id

* Add some better sql comments

* More accurate description

* Add changelog

* Make it clear what MSC the change is part of

* Add more detail on which insertion event came through

* Address review and improve sql queries

* Only use event_id as unique constraint

* Fix test case where insertion event is already in the normal DAG

* Remove debug changes

* Switch to chunk events so we can auth via power_levels

Previously, we were using `content.chunk_id` to connect one

chunk to another. But these events can be from any `sender`

and we can't tell who should be able to send historical events.

We know we only want the application service to do it but these

events have the sender of a real historical message, not the

application service user ID as the sender. Other federated homeservers

also have no indicator which senders are an application service on

the originating homeserver.

So we want to auth all of the MSC2716 events via power_levels

and have them be sent by the application service with proper

power levels in the room.

* Switch to chunk events for federation

* Add unstable room version to support new historical PL

* Fix federated events being rejected for no state_groups

Add fix from https://github.com/matrix-org/synapse/pull/10439

until it merges.

* Only connect base insertion event to prev_event_ids

Per discussion with @erikjohnston,

https://matrix.to/#/!UytJQHLQYfvYWsGrGY:jki.re/$12bTUiObDFdHLAYtT7E-BvYRp3k_xv8w0dUQHibasJk?via=jki.re&via=matrix.org

* Make it possible to get the room_version with txn

* Allow but ignore historical events in unsupported room version

See https://github.com/matrix-org/synapse/pull/10245#discussion_r675592489

We can't reject historical events on unsupported room versions because homeservers without knowledge of MSC2716 or the new room version don't reject historical events either.

Since we can't rely on the auth check here to stop historical events on unsupported room versions, I've added some additional checks in the processing/persisting code (`synapse/storage/databases/main/events.py` -> `_handle_insertion_event` and `_handle_chunk_event`). I've had to do some refactoring so there is a method to fetch the room version by `txn`.

* Move to unique index syntax

See https://github.com/matrix-org/synapse/pull/10245#discussion_r675638509

* High-level document how the insertion->chunk lookup works

* Remove create_event fallback for room_versions

See https://github.com/matrix-org/synapse/pull/10245/files#r677641879

* Use updated method name

|

| |

|

|

|

| |

It looks like it was first used and introduced in https://github.com/matrix-org/synapse/commit/5130d80d79fe1f95ce03b8f1cfd4fbf0a32f5ac8#diff-8a4a36a7728107b2ccaff2cb405dbab229a1100fe50653a63d1aa9ac10ae45e8R305

but the usage was removed in https://github.com/matrix-org/synapse/commit/4c6a31cd6efa25be4c9f1b357e8f92065fac63eb#diff-8a4a36a7728107b2ccaff2cb405dbab229a1100fe50653a63d1aa9ac10ae45e8

|

| | |

|

| |

|

| |

* Upsert redactions in case they already exist

Occasionally, in combination with retention, redactions aren't deleted

from the database whenever they are due for deletion. The server will

eventually try to backfill the deleted events and trip over the already

existing redaction events.

Switching to an UPSERT for those events allows us to recover from these

situations. The retention code still needs fixing but that is outside of

my current comfort zone on this code base.

This is related to #8707 where the error was discussed already.

Signed-off-by: Andreas Rammhold <andreas@rammhold.de>

* Also purge redactions when purging events

Previously, redactions were left behind, leading to backfilling issues

when the server stumbled across the already existing yet to be

backfilled redactions.

This issue has been discussed in #8707.

Signed-off-by: Andreas Rammhold <andreas@rammhold.de>
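
The UPSERT approach described above can be sketched with the standard-library `sqlite3` module. This is a minimal illustration, not the actual Synapse code: the two-column `redactions` schema and the `store_redaction` helper are simplified assumptions, and the `ON CONFLICT` clause requires SQLite 3.24 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Simplified, hypothetical schema: one row per redaction event.
conn.execute(
    "CREATE TABLE redactions (event_id TEXT PRIMARY KEY, redacts TEXT NOT NULL)"
)


def store_redaction(conn, event_id, redacts):
    """Insert a redaction, updating the row if the event_id already exists."""
    conn.execute(
        """
        INSERT INTO redactions (event_id, redacts) VALUES (?, ?)
        ON CONFLICT (event_id) DO UPDATE SET redacts = excluded.redacts
        """,
        (event_id, redacts),
    )


store_redaction(conn, "$redaction1", "$target1")
# A plain INSERT would raise sqlite3.IntegrityError here; the upsert does not.
store_redaction(conn, "$redaction1", "$target1")
rows = conn.execute("SELECT event_id, redacts FROM redactions").fetchall()
```

With a plain `INSERT`, the second call would fail on the primary key, which is the situation a backfill runs into when a stale redaction row was left behind.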

|

| |

|

|

|

|

|

|

|

|

| |

* Make `invalidate` and `invalidate_many` do the same thing

... so that we can do either over the invalidation replication stream, and also

because they always confused me a bit.

* Kill off `invalidate_many`

* changelog
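
As a rough illustration of a single `invalidate` that covers both cases, here is a toy cache keyed by tuples in which invalidating a key also drops every entry that has that key as a prefix. `PrefixCache` is a hypothetical class written for this sketch; it is not Synapse's cache implementation.

```python
from typing import Any, Dict, Tuple


class PrefixCache:
    """Toy cache with tuple keys; not Synapse's real cache classes."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[Any, ...], Any] = {}

    def set(self, key: Tuple[Any, ...], value: Any) -> None:
        self._entries[tuple(key)] = value

    def get(self, key: Tuple[Any, ...], default: Any = None) -> Any:
        return self._entries.get(tuple(key), default)

    def invalidate(self, key: Tuple[Any, ...]) -> None:
        # A full key removes one entry; a shorter key removes every
        # entry whose key starts with it.
        prefix = tuple(key)
        stale = [k for k in self._entries if k[: len(prefix)] == prefix]
        for k in stale:
            del self._entries[k]
```

Callers then only need one method, whether they hold a complete key such as `("room1", "user1")` or just the leading part `("room1",)`.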

|

| | |

|

| |

|

|

|

| |

There are a couple of points in `persist_events` where we are doing a

query per event in series, which we can replace.
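
The general pattern of replacing a query per event with one batched query can be sketched as follows. The table layout and both helper functions are assumptions made for illustration, using the standard-library `sqlite3` module rather than Synapse's database pool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, cut-down events table.
conn.execute(
    "CREATE TABLE events (event_id TEXT PRIMARY KEY, outlier INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?)", [("$a", 0), ("$b", 1), ("$c", 0)]
)


def have_events_serial(conn, event_ids):
    """One round trip per event: the pattern being replaced."""
    found = set()
    for event_id in event_ids:
        row = conn.execute(
            "SELECT event_id FROM events WHERE event_id = ?", (event_id,)
        ).fetchone()
        if row is not None:
            found.add(row[0])
    return found


def have_events_batched(conn, event_ids):
    """A single query with an IN clause covering all event IDs."""
    event_ids = list(event_ids)
    if not event_ids:
        return set()
    placeholders = ", ".join("?" for _ in event_ids)
    sql = f"SELECT event_id FROM events WHERE event_id IN ({placeholders})"
    return {row[0] for row in conn.execute(sql, event_ids)}
```

Both helpers return the same set; the batched form issues one statement regardless of how many events are checked. Very large batches may need to be split to stay within the database's bound-parameter limit.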

|

| |

|

|

|

|

|

| |

Part of #9744

Removes all redundant `# -*- coding: utf-8 -*-` lines from files, as Python 3 reads source code as UTF-8 by default.

`Signed-off-by: Jonathan de Jong <jonathan@automatia.nl>`

|

| |

|

|

|

|

|

| |

Part of #9366

Adds in fixes for B006 and B008, both relating to mutable parameter lint errors.

Signed-off-by: Jonathan de Jong <jonathan@automatia.nl>
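
For readers unfamiliar with these flake8-bugbear checks: B006 flags mutable default arguments and B008 flags function calls in argument defaults. Both defaults are evaluated once, when the function is defined. The functions below are made-up examples, not code from Synapse.

```python
import time
from typing import List, Optional


def append_bad(item: str, bucket: List[str] = []) -> List[str]:  # B006
    # The same list object is reused across calls.
    bucket.append(item)
    return bucket


def append_good(item: str, bucket: Optional[List[str]] = None) -> List[str]:
    # Create a fresh list per call when none is supplied.
    if bucket is None:
        bucket = []
    bucket.append(item)
    return bucket


def stamp_bad(now: float = time.time()) -> float:  # B008
    # time.time() ran once at definition time, so this never advances.
    return now


def stamp_good(now: Optional[float] = None) -> float:
    # Evaluate the clock at call time instead.
    return time.time() if now is None else now
```

Calling `append_bad("a")` and then `append_bad("b")` returns a list containing both items, whereas `append_good` returns a one-element list each time.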

|

| |

|

|

|

|

|

|

|

|

|

|

| |

* Populate `internal_metadata.outlier` based on `events` table

Rather than relying on `outlier` being in the `internal_metadata` column,

populate it based on the `events.outlier` column.

* Move `outlier` out of InternalMetadata._dict

Ultimately, this will allow us to stop writing it to the database. For now, we

have to grandfather it back in so as to maintain compatibility with older

versions of Synapse.

|

| |

|

| |

The idea here is to stop people forgetting to call `check_consistency`. Folks can still just pass in `None` to the new args in `build_sequence_generator`, but hopefully they won't.

|

| |

|

| |

And ensure the consistency of `event_auth_chain_id`.

|

| |

|

|

|

|

|

| |

- Update black version to the latest

- Run black auto formatting over the codebase

- Run autoformatting according to [`docs/code_style.md`](https://github.com/matrix-org/synapse/blob/80d6dc9783aa80886a133756028984dbf8920168/docs/code_style.md)

- Update `code_style.md` docs around installing black to use the correct version

|

| | |

|

| | |

|

| |

|

| |

`execute_batch` does fewer round trips in postgres than `executemany`, but does not give a correct `txn.rowcount` result afterwards.

|

| |

|

|

| |

(#9115)

|

| | |

|

| | |

|

| |

|

| |

Co-authored-by: Patrick Cloke <clokep@users.noreply.github.com>

|

| |

|

|

|

|

| |

Not being able to serialise `frozendicts` is fragile, and it's annoying to have

to think about which serialiser you want. There's no real downside to

supporting frozendicts, so let's just have one json encoder.
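
A single encoder that also accepts immutable mappings could look roughly like this. To keep the sketch standard-library only, `types.MappingProxyType` stands in for `frozendict`, and the names `_default` and `json_encoder` are chosen for illustration.

```python
import json
from collections.abc import Mapping
from types import MappingProxyType


def _default(obj):
    """Fallback for objects the json module cannot serialise itself."""
    if isinstance(obj, Mapping):
        # Read-only mappings are not dict subclasses, so convert them.
        return dict(obj)
    raise TypeError(
        f"Object of type {type(obj).__name__} is not JSON serializable"
    )


# One shared encoder for both plain dicts and immutable mappings.
json_encoder = json.JSONEncoder(default=_default, separators=(",", ":"))

frozen = MappingProxyType({"a": 1, "nested": MappingProxyType({"b": 2})})
encoded = json_encoder.encode({"content": frozen})
```

The `default` hook is only consulted for values the encoder does not already understand, so plain dicts, lists and strings take the usual fast path.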

|

| |

|

| |

Most of these uses don't need a full-blown DeferredCache; LruCache is lighter and more appropriate.

|

| |

|

|

|

| |

* Make sure a retention policy is a state event

* Changelog

|

| |

|

|

|

| |

(#8476)

Should fix #3365.

|

| |

|

|

|

| |

When pulling events out of the DB to send over replication we were not

filtering by instance name, and so we were sending events for other

instances.
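
The shape of the fix can be sketched as an extra `instance_name` condition on the query that feeds replication. The schema, the `get_updates_for_instance` helper and the instance names below are assumptions for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, cut-down events table recording which instance wrote each row.
conn.execute(
    """
    CREATE TABLE events (
        stream_ordering INTEGER PRIMARY KEY,
        event_id TEXT NOT NULL,
        instance_name TEXT NOT NULL
    )
    """
)
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [(1, "$a", "persister1"), (2, "$b", "persister2"), (3, "$c", "persister1")],
)


def get_updates_for_instance(conn, instance_name, last_id, current_id):
    """Return only the rows written by the given instance in (last_id, current_id]."""
    sql = """
        SELECT stream_ordering, event_id FROM events
        WHERE ? < stream_ordering AND stream_ordering <= ?
          AND instance_name = ?
        ORDER BY stream_ordering ASC
    """
    return conn.execute(sql, (last_id, current_id, instance_name)).fetchall()
```

Without the `instance_name` condition, a call for `persister1` would also return `$b`, which belongs to `persister2`.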

|

| |

|

|

|

|

|

|

| |

There's no need for it to be in the dict as well as the events table. Instead,

we store it in a separate attribute in the EventInternalMetadata object, and

populate that on load.

This means that we can rely on it being correctly populated for any event which

has been persisted to the database.

|

| | |

|

| | |

|

| |

|

| |

This will allow us to hit the DB after we've finished using the generated stream ID.

|

| |

|

|

|

|

| |

This is *not* ready for production yet. Caveats:

1. We should write some tests...

2. The stream token that we use for events can get stalled at the minimum position of all writers. This means that new events may not be processed and e.g. sent down sync streams if a writer isn't writing or is slow.
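
The second caveat can be illustrated with a toy calculation: if the usable stream token is the minimum position across all writers, a single idle writer holds it back. The `persisted_upto` function and the writer names are hypothetical and not taken from Synapse.

```python
from typing import Dict


def persisted_upto(positions: Dict[str, int]) -> int:
    """Everything at or below the slowest writer's position is known to be complete."""
    return min(positions.values())


positions = {"writer_a": 105, "writer_b": 42}
token_before = persisted_upto(positions)

# writer_a keeps persisting events while writer_b stays idle.
positions["writer_a"] = 200
token_after = persisted_upto(positions)
```

Here `token_after` is still 42, so events written by `writer_a` above that position would not yet be visible to consumers that read up to the token.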

|

| | |

|

| |

|

|

|

|

|

| |

* Revert "Add experimental support for sharding event persister. (#8170)"

This reverts commit 82c1ee1c22a87b9e6e3179947014b0f11c0a1ac3.

* Changelog

|

| | |

|

| |

|

|

|

|

| |

This is *not* ready for production yet. Caveats:

1. We should write some tests...

2. The stream token that we use for events can get stalled at the minimum position of all writers. This means that new events may not be processed and e.g. sent down sync streams if a writer isn't writing or is slow.

|

| |

|

|

| |

This is mainly so that `StreamIdGenerator` and `MultiWriterIdGenerator`

will have the same interface, allowing them to be used interchangeably.

|

| | |

|

| | |

|

| |

|